Archive for the ‘Office 365’ Category

PowerShell to Add a user as admin on all site collections in SharePoint online

Also explained in my YouTube video: https://youtu.be/icx_m15UIMg

You can modify this script to add the user as a member or visitor on all site collections instead.

#Connect to SharePoint Online

Connect-SPOService -Url https://contoso-admin.sharepoint.com

#Get all site collections

$siteCollections = Get-SPOSite -Limit All

#Loop through each site collection and add the user as an admin

foreach ($siteCollection in $siteCollections) {

Set-SPOUser -Site $siteCollection.Url -LoginName "user@contoso.com" -IsSiteCollectionAdmin $true

}

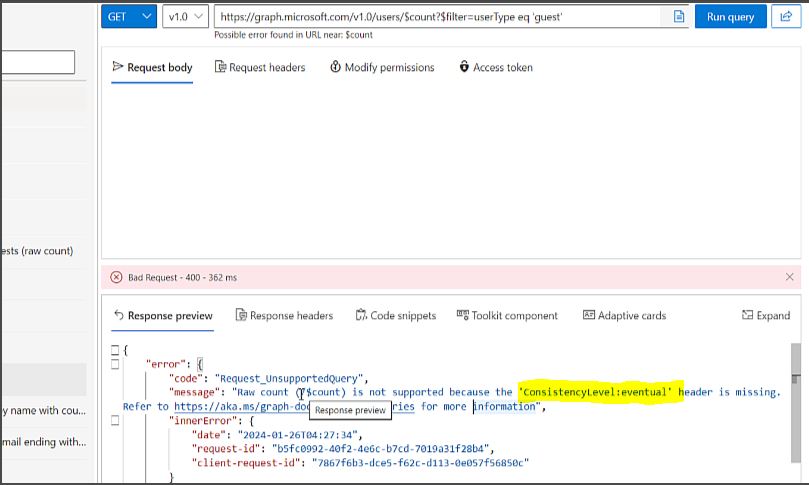

ConsistencyLevel:eventual error when using the Graph API sample ‘count the guest users in your organization’

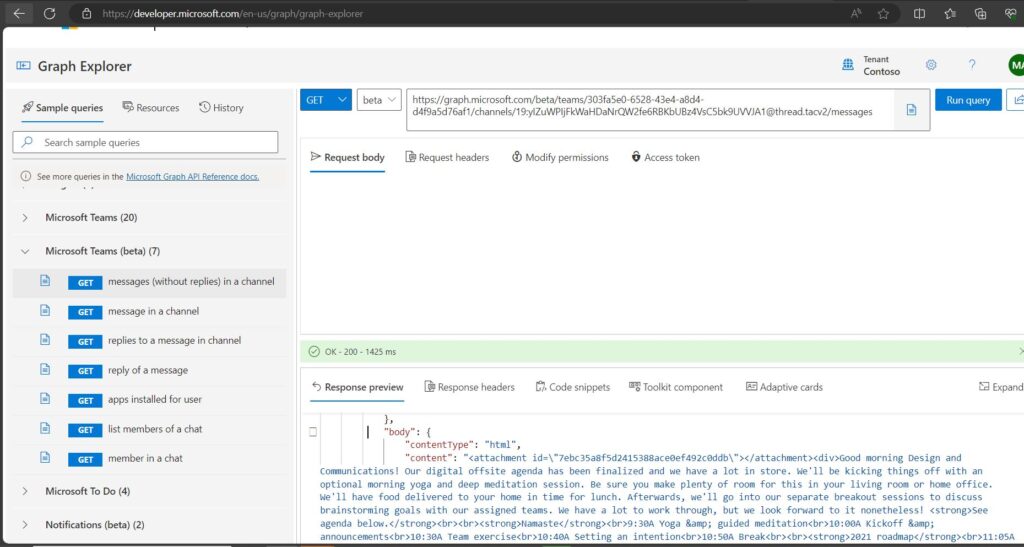

Read Microsoft Teams messages using Graph API

Explained the same in my YouTube video: https://youtu.be/Uez9QrBNNS0

Today I had a requirement to create a custom app that reads all Microsoft Teams messages and uses AI to reply to them.

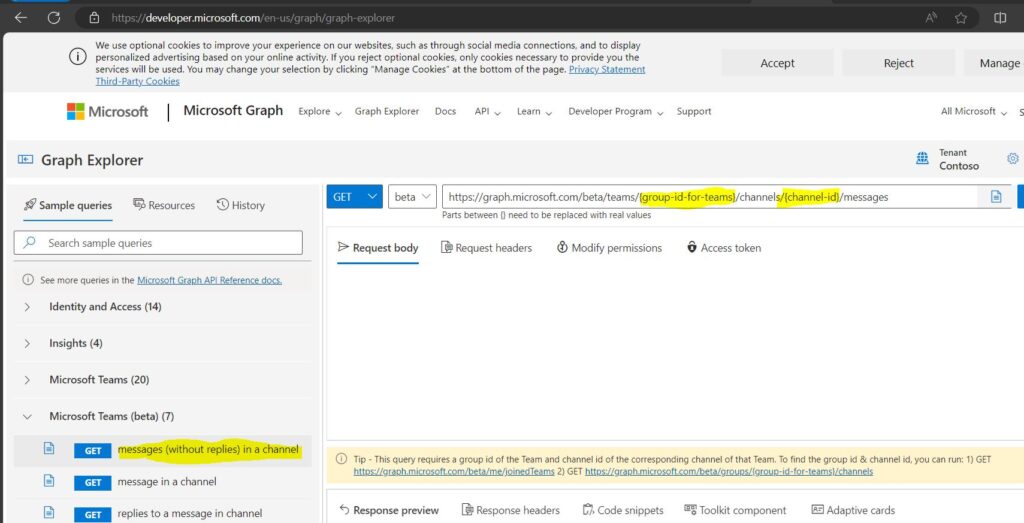

The first thing we need is to read all messages in Microsoft Teams using the Graph API. I looked for options and found a Teams beta API named ‘messages (without replies) in a channel’, but it needs the group ID and the channel ID to read the messages.

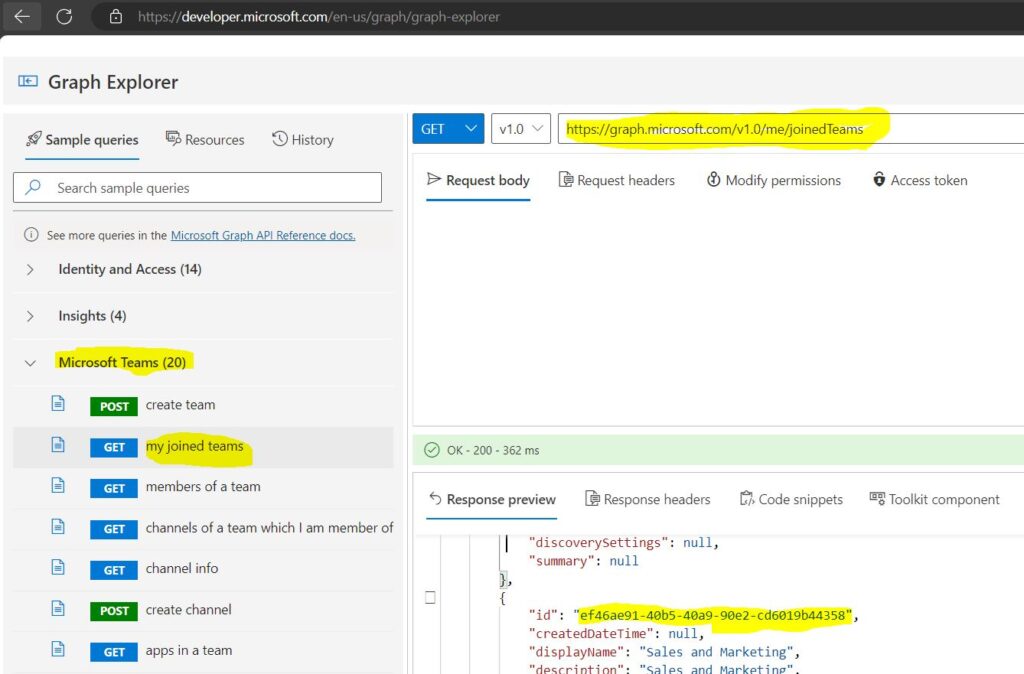

So I ran the Graph API call below to get all the teams I am a member of. The response includes the name and the ID of each team's group.

https://graph.microsoft.com/v1.0/me/joinedTeams

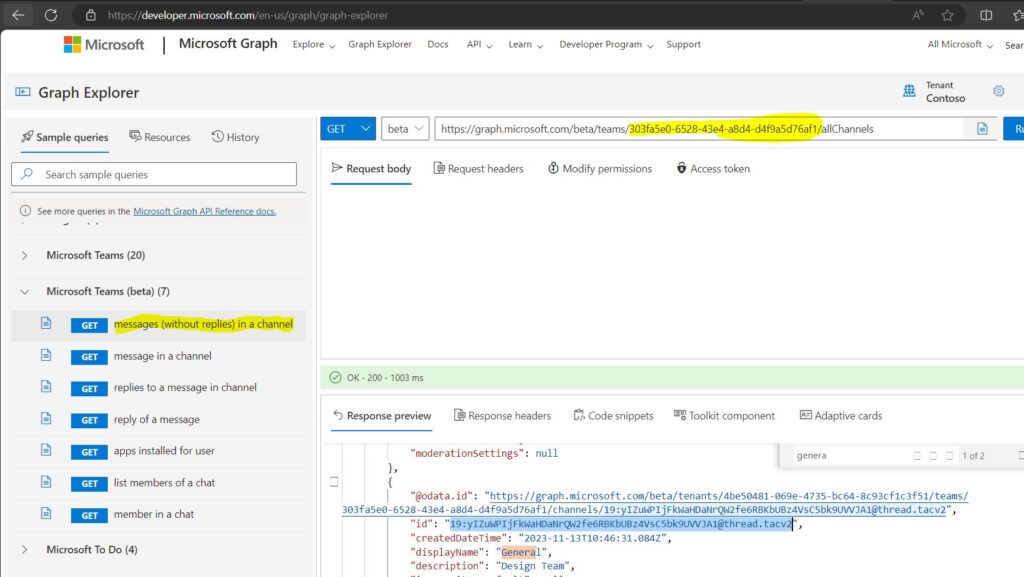

Now we need to use the group ID in the Graph query below to get all channels in that team.

https://graph.microsoft.com/beta/teams/{group-id-for-teams}/allChannels

Finally, once we have both the group ID and the channel ID, we use the Graph API call below to get all the messages in that channel.

Note: I am only able to see the unread messages.

https://graph.microsoft.com/beta/teams/{group-id-for-teams}/channels/{channel-id}/messages

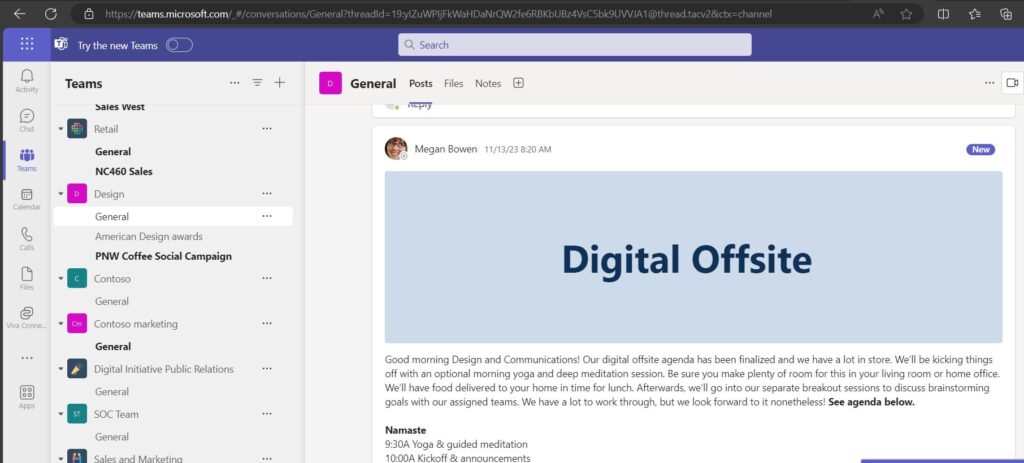
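The three calls in this walkthrough can be chained from code. The following is a minimal Python sketch that only builds the request URLs and pulls IDs out of a response. The helper names and the sample IDs are hypothetical, and sending the requests (with a valid access token) is left out.

```python
from urllib.parse import quote

GRAPH_ROOT = "https://graph.microsoft.com"


def joined_teams_url():
    """URL that lists the teams the signed-in user is a member of."""
    return f"{GRAPH_ROOT}/v1.0/me/joinedTeams"


def all_channels_url(team_id):
    """URL that lists all channels of one team (beta endpoint, as in the post)."""
    return f"{GRAPH_ROOT}/beta/teams/{quote(team_id, safe='')}/allChannels"


def channel_messages_url(team_id, channel_id):
    """URL that lists the messages (without replies) of one channel."""
    return (
        f"{GRAPH_ROOT}/beta/teams/{quote(team_id, safe='')}"
        f"/channels/{quote(channel_id, safe='')}/messages"
    )


def ids_from_listing(response):
    """Collect the "id" of every entry in a Graph collection response."""
    return [entry["id"] for entry in response.get("value", [])]


# Hypothetical IDs, for illustration only.
print(all_channels_url("team-1"))
print(channel_messages_url("team-1", "19:abc@thread.tacv2"))
```

Channel IDs contain characters such as `:` and `@`, so the sketch percent-encodes them before placing them in the path.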

I am able to see the same messages in the Teams GUI.

Ways to add an item to SharePoint lists

Explained the same in my YouTube video: https://youtu.be/xioKl4KrlLo

To use Graph API to add content to a SharePoint list, you need to have the appropriate permissions, the site ID, the list ID, and the JSON representation of the list item you want to create. You can use the following HTTP request to create a new list item:

POST https://graph.microsoft.com/v1.0/sites/{site-id}/lists/{list-id}/items

Content-Type: application/json

{

"fields": {

// your list item properties

}

}

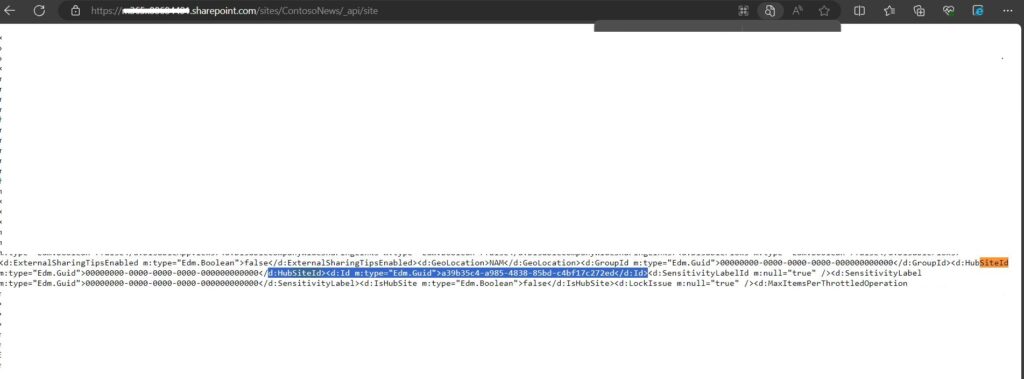

To get the site ID, open the URL below:

https://TenantName.sharepoint.com/sites/SiteName/_api/site

In the response, the Id value that follows the HubSiteId tag is your site ID.

For example, if you want to create a list item with the title “Item2”, the color “Pink”, and the Number 1, you can use this JSON body:

{

"fields": {

"Title": "Item2",

"Color": "Pink",

"Number": 1

}

}

If the request is successful, you will get a response with the created list item and its properties.
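As a rough illustration of the request described above, here is a small Python sketch that assembles the URL, header, and JSON body for the "Item2" example. The function name is hypothetical, the `{site-id}` and `{list-id}` placeholders are left unfilled on purpose, and the sketch does not send the request or handle authentication.

```python
import json


def list_item_request(site_id, list_id, fields):
    """Return (url, headers, body) for creating a SharePoint list item via Graph."""
    url = f"https://graph.microsoft.com/v1.0/sites/{site_id}/lists/{list_id}/items"
    headers = {"Content-Type": "application/json"}
    # Graph expects the column values wrapped in a "fields" object.
    body = json.dumps({"fields": fields})
    return url, headers, body


url, headers, body = list_item_request(
    "{site-id}",
    "{list-id}",
    {"Title": "Item2", "Color": "Pink", "Number": 1},
)
print("POST", url)
print(body)
```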

You can also use the Graph SDKs to simplify the process of creating list items in different programming languages. For example, here is how you can create a list item in C# using the Microsoft Graph SDK:

using System.Collections.Generic;

using System.Net.Http.Headers;

using System.Threading.Tasks;

using Microsoft.Graph;

using Microsoft.Identity.Client;

// Create a public client application

var app = PublicClientApplicationBuilder.Create(clientId)

.WithAuthority(authority)

.Build();

// Get the access token

var result = await app.AcquireTokenInteractive(scopes)

.ExecuteAsync();

// Create a Graph client

var graphClient = new GraphServiceClient(

new DelegateAuthenticationProvider((requestMessage) =>

{

requestMessage

.Headers

.Authorization = new AuthenticationHeaderValue("bearer", result.AccessToken);

return Task.CompletedTask;

}));

// Create a dictionary of fields for the list item

var fields = new FieldValueSet

{

AdditionalData = new Dictionary<string, object>()

{

{"Title", "Item"},

{"Color", "Pink"},

{"Number", 1}

}

};

// Create the list item; AddAsync expects a ListItem that wraps the fields

var listItem = await graphClient.Sites[siteId].Lists[listId].Items

.Request()

.AddAsync(new ListItem { Fields = fields });

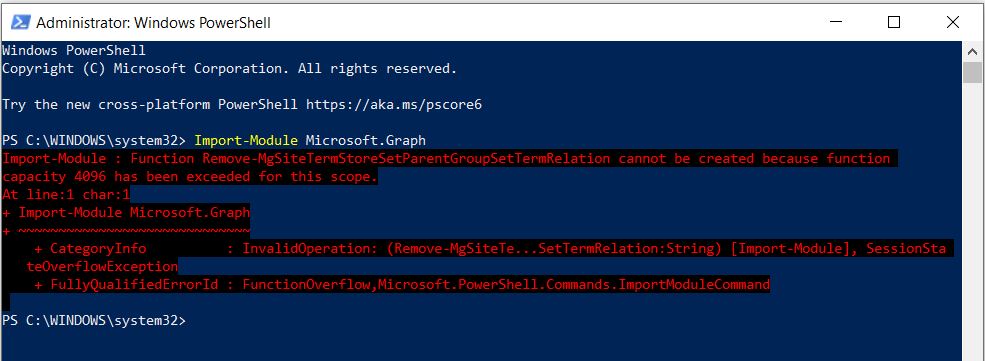

Import-Module : Function Remove-MgSiteTermStoreSetParentGroupSetTermRelation cannot be created because function capacity 4096 has been exceeded for this scope

Explained everything in the video: https://youtu.be/7-btaMI6wJI

Today I got the error below when I tried to import the Microsoft Graph module into PowerShell (Import-Module Microsoft.Graph):

Import-Module : Function Remove-MgSiteTermStoreSetParentGroupSetTermRelation cannot be created because function capacity 4096 has been exceeded for this scope.

At line:1 char:1

+ Import-Module Microsoft.Graph

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : InvalidOperation: (Remove-MgSiteTe...SetTermRelation:String) [Import-Module], SessionStateOverflowException

+ FullyQualifiedErrorId : FunctionOverflow,Microsoft.PowerShell.Commands.ImportModuleCommand

After a lot of research, I found that this is a known issue when importing the Microsoft.Graph module in PowerShell 5.1: the module defines more than 4096 functions, and PowerShell 5.1 limits the number of functions that can be created in a scope to 4096 by default.

There are some possible workarounds that you can try to resolve this error:

- Upgrade to PowerShell 7+ or latest as the runtime version (highly recommended). PowerShell 7+ does not have the function capacity limit and can import the module without any errors.

- Set the $MaximumFunctionCount variable to its maximum value, 32768, before importing the module. This raises the function capacity limit for your scope, but it may not be enough if you have other modules loaded or if the Microsoft.Graph module adds more functions in the future.

To check all the maximum values set in PowerShell:

Get-Variable Max*Count

To set the maximum function count:

$MaximumFunctionCount = 32768

I hope this helps you understand how to fix the error and import the Microsoft.Graph module successfully.

Get duplicate files in all SharePoint site using file HASH

Explained everything in the video: https://youtu.be/WHk2tIav-sQ

The task was to find a way to identify all duplicate files in all SharePoint sites. I searched online for a solution, but none of the scripts I found were accurate. They used different criteria to detect duplicates, such as name, modified date, or size.

After extensive research, I developed the following script, which generates a hash for each file on the SharePoint sites. Files with identical content always produce the same hash, while files that differ by even a single character will, for practical purposes, produce different hashes.
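To make the idea concrete, here is a minimal Python sketch of hash-based duplicate detection. It is not the PnP script itself: it works on an in-memory dictionary of hypothetical file paths and contents, and groups paths whose MD5 digests match.

```python
import hashlib
from collections import defaultdict


def md5_hex(content):
    """Return the MD5 digest of a file's bytes as a hex string."""
    return hashlib.md5(content).hexdigest()


def find_duplicates(files):
    """Group file paths by content hash and keep only groups with more than one path."""
    groups = defaultdict(list)
    for path, content in files.items():
        groups[md5_hex(content)].append(path)
    return [sorted(paths) for paths in groups.values() if len(paths) > 1]


# Hypothetical sample data: two identical files and one that differs by one character.
sample = {
    "/sites/a/Docs/report.docx": b"quarterly numbers",
    "/sites/b/Docs/copy of report.docx": b"quarterly numbers",
    "/sites/a/Docs/notes.txt": b"quarterly numbers!",
}
print(find_duplicates(sample))
```

Only the two byte-identical files end up in the same group; the file with the extra character hashes differently and is not reported.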

If you want to do the same for only one SharePoint site, you can use the link below: Get duplicate files in SharePoint site using file HASH. (itfreesupport.com)

I hope this script will be useful for many people.

Register a new Azure AD Application and Grant Access to the tenant

Register-PnPManagementShellAccess

Then paste and run the PnP script below:

#Parameters

$TenantURL = "https://tenant-admin.sharepoint.com"

$Pagesize = 2000

$ReportOutput = "C:\Temp\DupSitename.csv"

#Connect to SharePoint Online tenant

Connect-PnPOnline $TenantURL -Interactive

Connect-SPOService $TenantURL

#Array to store results

$DataCollection = @()

#Get all site collections

$SiteCollections = Get-SPOSite -Limit All -Filter "Url -like '/sites/'"

#Iterate through each site collection

ForEach ($Site in $SiteCollections)

{

#Get the site URL

$SiteURL = $Site.Url

#Connect to SharePoint Online site

Connect-PnPOnline $SiteURL -Interactive

#Get all Document libraries

$DocumentLibraries = Get-PnPList | Where-Object {$_.BaseType -eq "DocumentLibrary" -and $_.Hidden -eq $false -and $_.ItemCount -gt 0 -and $_.Title -Notin ("Site Pages","Style Library", "Preservation Hold Library")}

#Iterate through each document library

ForEach ($Library in $DocumentLibraries)

{

#Get All documents from the library

$global:counter = 0;

$Documents = Get-PnPListItem -List $Library -PageSize $Pagesize -Fields ID, File_x0020_Type -ScriptBlock `

{ Param ($items) $global:counter += $items.Count; Write-Progress -PercentComplete ($global:Counter / ($Library.ItemCount) * 100) -Activity `

"Getting Documents from Library '$($Library.Title)'" -Status "Getting Documents data $global:Counter of $($Library.ItemCount)";} | Where {$_.FileSystemObjectType -eq "File"}

$ItemCounter = 0

#Iterate through each document

Foreach ($Document in $Documents)

{

#Get the File from Item

$File = Get-PnPProperty -ClientObject $Document -Property File

#Get The File Hash

$Bytes = $File.OpenBinaryStream()

Invoke-PnPQuery

$MD5 = New-Object -TypeName System.Security.Cryptography.MD5CryptoServiceProvider

$HashCode = [System.BitConverter]::ToString($MD5.ComputeHash($Bytes.Value))

#Collect data

$Data = New-Object PSObject

$Data | Add-Member -MemberType NoteProperty -name "FileName" -value $File.Name

$Data | Add-Member -MemberType NoteProperty -Name "HashCode" -value $HashCode

$Data | Add-Member -MemberType NoteProperty -Name "URL" -value $File.ServerRelativeUrl

$Data | Add-Member -MemberType NoteProperty -Name "FileSize" -value $File.Length

$DataCollection += $Data

$ItemCounter++

Write-Progress -PercentComplete ($ItemCounter / ($Library.ItemCount) * 100) -Activity "Collecting data from Documents $ItemCounter of $($Library.ItemCount) from $($Library.Title)" `

-Status "Reading Data from Document '$($Document['FileLeafRef']) at '$($Document['FileRef'])"

}}

}

#Get duplicate files by grouping on hash code

$Duplicates = $DataCollection | Group-Object -Property HashCode | Where {$_.Count -gt 1} | Select -ExpandProperty Group

Write-Host "Duplicate Files Based on File Hashcode:"

$Duplicates | Format-Table -AutoSize

#Export the duplicate results to CSV

$Duplicates | Export-Csv -Path $ReportOutput -NoTypeInformation

Get duplicate files in SharePoint site using file HASH

Explained everything in the video: https://youtu.be/WHk2tIav-sQ

The task was to find a way to identify all duplicate files in a SharePoint site. I searched online for a solution, but none of the scripts I found were accurate. They used different criteria to detect duplicates, such as name, modified date, or size.

After extensive research, I developed the following script, which generates a hash for each file on the SharePoint site. Files with identical content always produce the same hash, while files that differ by even a single character will, for practical purposes, produce different hashes.

If you want to do the same for all SharePoint sites, you can use the link below:

Get duplicate files in all SharePoint site using file HASH. (itfreesupport.com)

I hope this script will be useful for many people.

Register a new Azure AD Application and Grant Access to the tenant

Register-PnPManagementShellAccess

Then paste and run the PnP script below:

$SiteURL = "https://tenant.sharepoint.com/sites/sitename"

$Pagesize = 2000

$ReportOutput = "C:\Temp\DupSitename.csv"

#Connect to SharePoint Online site

Connect-PnPOnline $SiteURL -Interactive

#Array to store results

$DataCollection = @()

#Get all Document libraries

$DocumentLibraries = Get-PnPList | Where-Object {$_.BaseType -eq "DocumentLibrary" -and $_.Hidden -eq $false -and $_.ItemCount -gt 0 -and $_.Title -Notin ("Site Pages","Style Library", "Preservation Hold Library")}

#Iterate through each document library

ForEach ($Library in $DocumentLibraries)

{

#Get All documents from the library

$global:counter = 0;

$Documents = Get-PnPListItem -List $Library -PageSize $Pagesize -Fields ID, File_x0020_Type -ScriptBlock { Param ($items) $global:counter += $items.Count; Write-Progress -PercentComplete ($global:Counter / ($Library.ItemCount) * 100) -Activity "Getting Documents from Library '$($Library.Title)'" -Status "Getting Documents data $global:Counter of $($Library.ItemCount)";} | Where {$_.FileSystemObjectType -eq "File"}

$ItemCounter = 0

#Iterate through each document

Foreach ($Document in $Documents)

{

#Get the File from Item

$File = Get-PnPProperty -ClientObject $Document -Property File

#Get The File Hash

$Bytes = $File.OpenBinaryStream()

Invoke-PnPQuery

$MD5 = New-Object -TypeName System.Security.Cryptography.MD5CryptoServiceProvider

$HashCode = [System.BitConverter]::ToString($MD5.ComputeHash($Bytes.Value))

#Collect data

$Data = New-Object PSObject

$Data | Add-Member -MemberType NoteProperty -name "FileName" -value $File.Name

$Data | Add-Member -MemberType NoteProperty -Name "HashCode" -value $HashCode

$Data | Add-Member -MemberType NoteProperty -Name "URL" -value $File.ServerRelativeUrl

$Data | Add-Member -MemberType NoteProperty -Name "FileSize" -value $File.Length

$DataCollection += $Data

$ItemCounter++

Write-Progress -PercentComplete ($ItemCounter / ($Library.ItemCount) * 100) -Activity "Collecting data from Documents $ItemCounter of $($Library.ItemCount) from $($Library.Title)" `

-Status "Reading Data from Document '$($Document['FileLeafRef']) at '$($Document['FileRef'])"

}}

#Get duplicate files by grouping on hash code

$Duplicates = $DataCollection | Group-Object -Property HashCode | Where {$_.Count -gt 1} | Select -ExpandProperty Group

Write-Host "Duplicate Files Based on File Hashcode:"

$Duplicates | Format-Table -AutoSize

#Export the duplicate results to CSV

$Duplicates | Export-Csv -Path $ReportOutput -NoTypeInformation

Download content from other users' OneDrive using Graph API

Explained the same on my YouTube channel: https://www.youtube.com/watch?v=YG19odJN94Q

If you want to download content from other users’ OneDrive accounts, you have a few options. The simplest is to create a OneDrive link for each user in the Office 365 admin center, which lets you access and download their files through a web browser. However, this is not efficient if you have to do it for many users. A better option is to build a custom application on top of Graph API calls, which can download files for multiple users at once without requiring individual links. Follow the steps below to get the API calls for such an application.

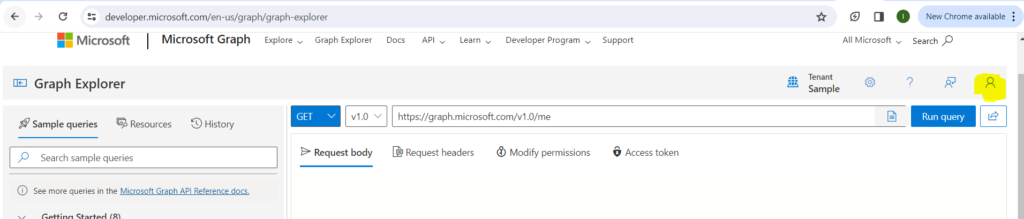

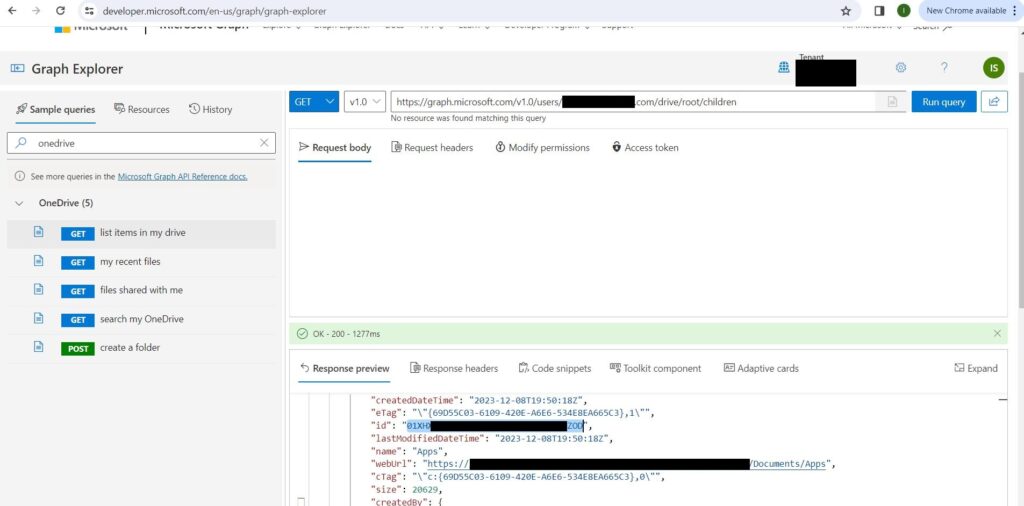

Open Graph Explorer: https://developer.microsoft.com/en-us/graph/graph-explorer

Sign in and give consent to Graph Explorer using the user icon in the right corner of the screen.

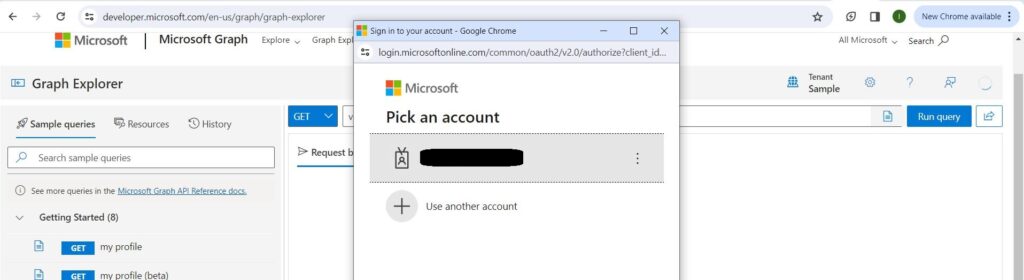

Now enter the credentials of the account you want to use and give consent.

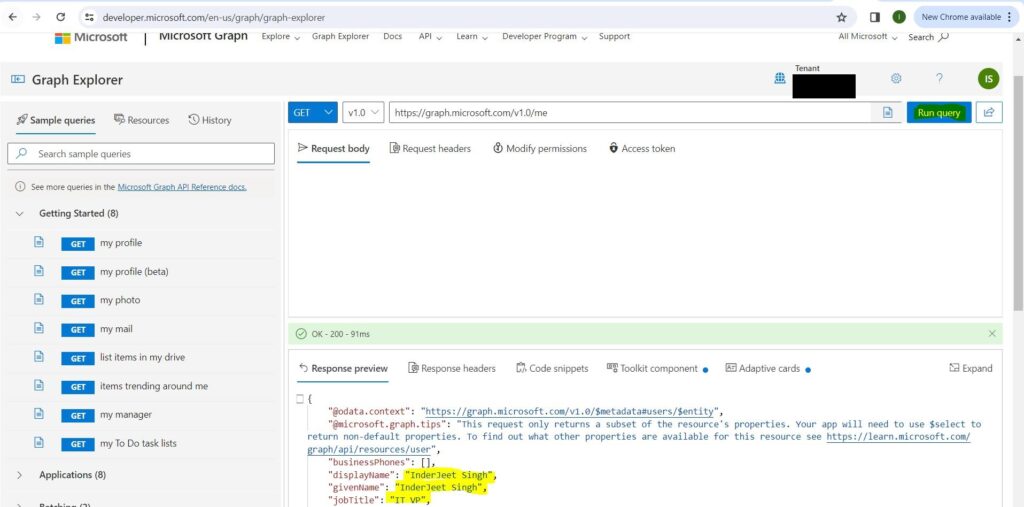

Now run the initial API call and confirm that you get 200 OK and your details in the Response view.

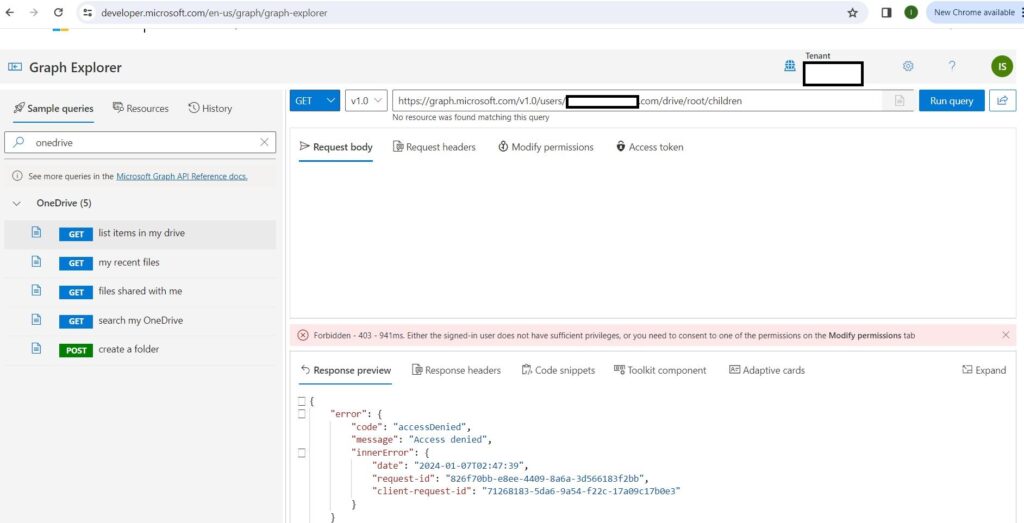

The first API URL you can use to query another user's OneDrive content is https://graph.microsoft.com/v1.0/users/user@domain.com/drive/root/children

Sometimes, when you try to download content from other users’ OneDrive accounts, you may encounter an access denied error. This means that you do not have the permission to access their files. To fix this error, you need to do one of the following things:

- Make sure that you are a Global Admin for the organization. This role gives you the highest level of access to all the resources in the organization, including other users’ OneDrive accounts.

- Grant admin access to other users’ OneDrive accounts by following these steps:

- In the left pane, select Admin centers > SharePoint. (You might need to select Show all to see the list of admin centers.)

- If the classic SharePoint admin center appears, select Open it now at the top of the page to open the SharePoint admin center.

- In the left pane, select More features.

- Under User profiles, select Open.

- Under People, select Manage User Profiles.

- Enter the name of the user whose OneDrive you need (for example, a former employee) and select Find.

- Right-click the user, and then choose Manage site collection owners.

- Add the user to Site collection administrators and select OK.

This option allows you to specify which users’ OneDrive accounts you want to access, without requiring you to be a Global Admin.

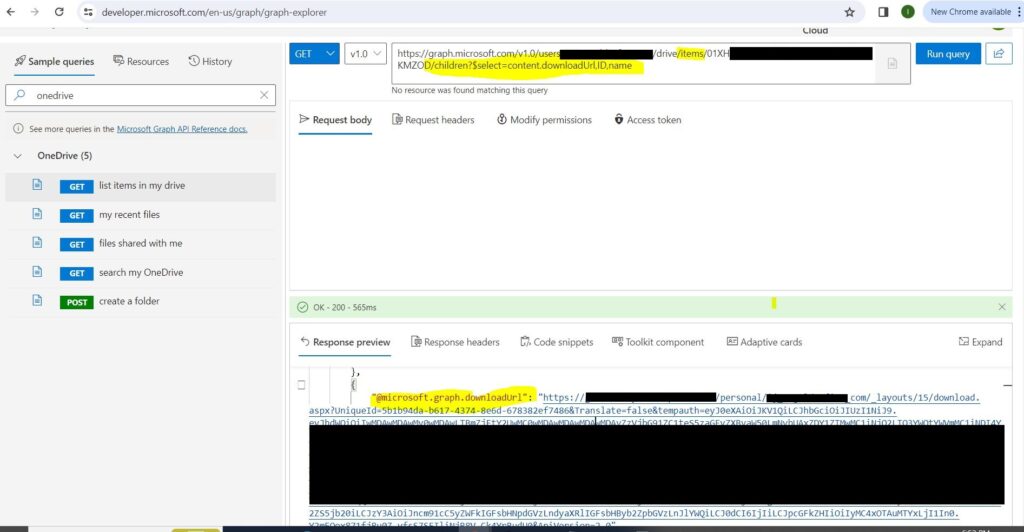

After you successfully execute the above API calls, you will get a response that shows you the list of all the folders and files on the root of the other users’ OneDrive accounts. Each folder and file will have a unique ID that you can use to access its contents. To get the downloadable links for all the files in a specific folder, you need to use the following API calls:

These API calls will return a response that contains the links for each file in the folder. You can download any file by clicking on its link. The file will be saved to your local device.
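The exact calls are not listed above, so the following Python sketch is an assumption rather than the author's original queries. It relies on two documented Graph behaviors: a folder's contents can be listed with `/drive/items/{item-id}/children`, and file entries in such a listing carry an `@microsoft.graph.downloadUrl` property. The helper names and the sample response are hypothetical, and no request is actually sent.

```python
from urllib.parse import quote

GRAPH_V1 = "https://graph.microsoft.com/v1.0"


def root_children_url(user):
    """URL that lists the root of another user's OneDrive."""
    return f"{GRAPH_V1}/users/{quote(user, safe='@')}/drive/root/children"


def folder_children_url(user, item_id):
    """URL that lists the children of one folder, identified by its item ID."""
    return (
        f"{GRAPH_V1}/users/{quote(user, safe='@')}"
        f"/drive/items/{quote(item_id, safe='')}/children"
    )


def download_links(children_response):
    """Map file name to download link for every file entry in a children listing."""
    links = {}
    for item in children_response.get("value", []):
        link = item.get("@microsoft.graph.downloadUrl")
        if link:  # folders have no download link and are skipped
            links[item["name"]] = link
    return links


# Hypothetical response shape, trimmed to the properties used here.
sample_response = {
    "value": [
        {"id": "01ABC", "name": "Reports", "folder": {"childCount": 2}},
        {
            "id": "01DEF",
            "name": "budget.xlsx",
            "@microsoft.graph.downloadUrl": "https://example.invalid/download/budget",
        },
    ]
}
print(folder_children_url("user@domain.com", "01ABC"))
print(download_links(sample_response))
```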

Office 365 get all sites with its size in GB

Explained everything in the video: https://youtu.be/rqn1KNYKlhE

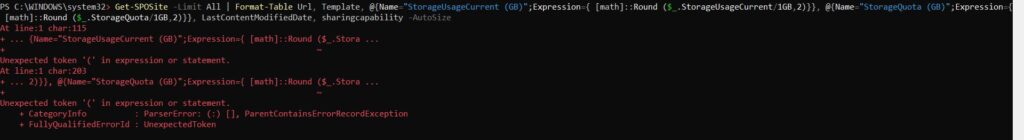

Recently I was asked for a script or command that lists all sites in Office 365 with their size in GB. I looked for ways to do so but could not find anything that helped. After some research I tried ChatGPT and Bard, but the scripts they produced did not work for me and kept returning errors. A sample of a non-working command and its error is shown below:

THIS COMMAND IS NOT WORKING

Get-SPOSite | Select Title, Url, Owner, SharingCapability, LastContentModifiedDate, @{Name=”Size (GB)”;Expression={ [math]::round ($_.length/1GB,4)}} | Export-CSV “C:\SharePoint-Online-Sites.csv” -NoTypeInformation -Encoding UTF8

At line:1 char:115

+ ... {Name="StorageUsageCurrent (GB)";Expression={ [math]::Round ($_.Stora ...

+ ~

Unexpected token '(' in expression or statement.

At line:1 char:203

+ ... 2)}}, @{Name="StorageQuota (GB)";Expression={ [math]::Round ($_.Stora ...

+ ~

Unexpected token '(' in expression or statement.

+ CategoryInfo : ParserError: (:) [], ParentContainsErrorRecordException

+ FullyQualifiedErrorId : UnexpectedToken

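I did a lot of research and found the issue in the above command, along with a way to get all sites with their size in GB. The parser error comes from the space between [math]::Round and the opening parenthesis, and the site size is exposed by the StorageUsageCurrent property (reported in MB) rather than by length.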

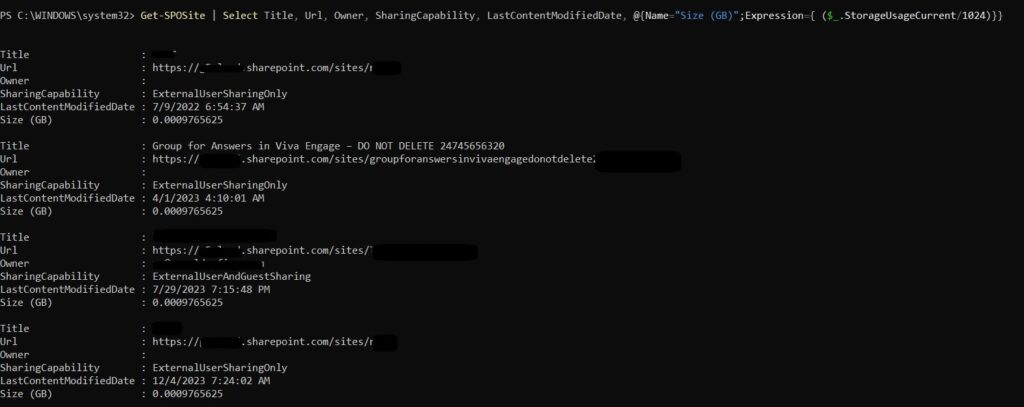

THIS IS THE WORKING COMMAND

Get-SPOSite -Limit All | Select Title, Url, Owner, SharingCapability, LastContentModifiedDate, @{Name="Size (GB)";Expression={ ($_.StorageUsageCurrent/1024)}}

Also, if you want to export the results to a CSV file, use the command below:

Get-SPOSite -Limit All | Select Title, Url, Owner, SharingCapability, LastContentModifiedDate, @{Name="Size (GB)";Expression={ ($_.StorageUsageCurrent/1024)}} | Export-CSV "C:\SharePoint-Online-Sites.csv" -NoTypeInformation -Encoding UTF8
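The conversion in the working command is plain arithmetic: StorageUsageCurrent is reported in megabytes, so dividing by 1024 gives gigabytes. A tiny Python sketch of the same calculation, with rounding added as an optional extra (the function name and sample values are made up for illustration):

```python
def storage_mb_to_gb(storage_usage_mb, digits=2):
    """Convert a size in MB (as reported by StorageUsageCurrent) to GB."""
    return round(storage_usage_mb / 1024, digits)


print(storage_mb_to_gb(5120))  # 5.0
print(storage_mb_to_gb(1536))  # 1.5
```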

You will also have to connect to your Office 365 subscription first. Use the commands below to do so:

Install-Module -Name Microsoft.Online.SharePoint.PowerShell

Connect-SPOService -Url https://YOURTENANTNAME-admin.sharepoint.com

Note: if you are using GCC High, add ‘-Region ITAR’ to the Connect-SPOService command above.

SharePoint Residency: What You Need to Know

SharePoint Online, a cloud-based service, empowers organizations to create, share, and govern content, knowledge, and applications. It is part of the comprehensive Microsoft 365 suite, comprising Exchange Online, OneDrive for Business, Microsoft Teams, and other integral services. However, customers’ data residency requirements and preferences vary significantly. Some necessitate or desire storing their SharePoint Online data in a particular country or region, owing to factors such as compliance, performance, or sovereignty. To address this diversity, Microsoft offers distinct options for SharePoint Residency:

- Data Residency Commitments

- Advanced Data Residency

- Multi-Geo Capabilities

In this article, we will explain what each option means, how to purchase and use it, and what its benefits and limitations are.

Data Residency Commitments

Data Residency Commitments serve as the default choice for SharePoint Online customers who enlist in Microsoft 365 within the Local Region Geography, the European Union, or the United States. This means that their SharePoint Online data will be stored in the same country or region as their sign-up location, unless stated otherwise in the Privacy and Security Product Terms. For instance, a customer registering for Microsoft 365 in Canada can anticipate their SharePoint Online data being housed in Canada, unless they opt for an alternative.

- SharePoint Online site content and the files stored within that site

- Files uploaded to OneDrive for Business

- Microsoft 365 Video services

- Office in a browser

- Microsoft 365 Apps for enterprise

- Visio Pro for Microsoft 365

This option encompasses various types of SharePoint Online data such as documents, lists, and files, among others. It is seamlessly integrated into the Microsoft 365 subscription and is applicable to all users in the tenant. Nevertheless, it does not ensure that the SharePoint Online data will always stay within the country or region of origin, as there might be exceptional circumstances where Microsoft accesses or relocates the data for operational or legal reasons.

Advanced Data Residency

For those desiring greater authority over their data residency, there exists the option of Advanced Data Residency. This supplementary choice caters to SharePoint Online customers seeking extended control and assurance regarding their data residency. With Advanced Data Residency, customers gain access to expanded coverage for Microsoft 365 workloads and customer data, committed data residency for local country or region datacenter regions, and prioritized tenant migration services. Essentially, this empowers customers to specify a particular datacenter region within their Local Region Geography or Expanded Local Region Geography for housing their SharePoint Online data, with Microsoft observing a policy of not moving or accessing their data outside that defined region, except when mandated by law or with the customer’s explicit consent.

The Advanced Data Residency option covers the following workloads, in addition to the ones covered by the Data Residency Commitments option:

- Microsoft Teams

- Microsoft Defender for Office P1 and Exchange Online Protection

- Viva Connections

- Viva Topics

- Microsoft Purview Audit (Standard and Premium)

- Data Retention

- Microsoft Purview Records Management

- Sensitivity Labels

- Data Loss Prevention

- Office Message Encryption

- Information Barriers

The Advanced Data Residency option requires an additional purchase and configuration. Customers must meet the following prerequisites to be eligible to purchase the Advanced Data Residency add-on:

- The Tenant Default Geography must be one of the countries or regions included in the Local Region Geography or Expanded Local Region Geography, such as Australia, Brazil, Canada, France, Germany, India, Israel, Italy, Japan, Poland, Qatar, South Korea, Norway, South Africa, Sweden, Switzerland, United Arab Emirates, and United Kingdom.

- Customers must have licenses for one or more of the following products: Microsoft 365 F1, F3, E3, or E5; Office 365 F3, E1, E3, or E5; Exchange Online Plan 1 or Plan 2; OneDrive for Business Plan 1 or Plan 2; SharePoint Online Plan 1 or Plan 2; Microsoft 365 Business Basic, Standard or Premium.

- Customers must cover 100% of paid seats in the tenant with the Advanced Data Residency add-on license for the tenant to receive data residency for the Advanced Data Residency workloads.

Customers can purchase the Advanced Data Residency add-on through their Microsoft account representative or partner. After purchasing the add-on, customers can request a tenant migration to their preferred datacenter region through the Microsoft 365 admin center or by contacting Microsoft support. The migration process may take several weeks or months, depending on the size and complexity of the tenant. During the migration, customers may experience some temporary impacts on their SharePoint Online services, such as video playback, search, or synchronization.

Multi-Geo Capabilities

Multi-Geo Capabilities is another add-on option for SharePoint Online customers who have a global presence and need to store their SharePoint Online data in multiple countries or regions, to meet different data residency requirements or preferences across their organization. With Multi-Geo Capabilities, customers can assign users of SharePoint Online and OneDrive for Business to any Satellite Geography supported by Multi-Geo, and their SharePoint Online data will reside in that geography, for example India, Japan, Norway, South Africa, South Korea, Switzerland, United Arab Emirates, United Kingdom, or United States. Customers can also use the Default Geography as a Satellite Geography, if it is different from their Tenant Default Geography.

The Multi-Geo Capabilities option does not guarantee that the SharePoint Online data will never leave the Satellite Geography, as there may be some scenarios where the data may be accessed or moved by Microsoft for operational or legal purposes. For more information, see the Location of Customer Data at Rest for Core Online Services section in the Privacy and Security Product Terms.