Archive for the ‘.NET’ Category

Delete Jobs API to support bulk delete in Azure Digital Twins

The Delete Jobs API lets you delete all models, twins, and relationships in an Azure Digital Twins instance in a single operation. It doesn't remove other types of entities, such as endpoints, routes, or jobs. It's part of the data plane APIs within Azure Digital Twins, which are used to manage the elements in an instance, and it aims to make data deletion easier and more efficient for Azure Digital Twins users.

Using the Delete Jobs API brings several benefits, including improved security and privacy, better performance and efficiency, and simplified management and operation. It can help users meet their requirements while saving time, money, and effort.

The Delete Jobs API has the following characteristics:

- It accepts an operation-id header, which is a unique identifier for the job's status monitor. The operation-id can be generated by the user, or by the service if the user doesn't pass the header.

- It supports an optional timeoutInMinutes query parameter, which specifies the desired timeout for the delete job. Once the specified timeout is reached, the service stops any in-progress delete operations triggered by the current delete job, and the job moves to the failed state. This leaves the instance in an unknown state, because there is no rollback operation.

- It returns a 202 Accepted status code, along with an Operation-Location header, which contains the URL to monitor the status of the job. The response body also contains the job details, such as the id, createdDateTime, finishedDateTime, purgeDateTime, and status.

- It supports four possible statuses for the job: notstarted, running, succeeded, or failed.

- It supports only one bulk delete job at a time within an instance. If the user tries to initiate another delete job while one is already in progress, the service will return a 400 Bad Request status code, along with a JobLimitReached error code.
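The characteristics above map onto a single HTTP request. The following is a minimal C# sketch that only builds (and does not send) that request. It assumes the REST route POST /jobs/deletions with api-version=2023-10-31, which is my understanding of the Azure Digital Twins "Delete Jobs" operation; check the data plane REST reference before relying on it. The host name, token, and the DeleteJobRequests helper are hypothetical placeholders.

```csharp
#nullable enable
using System;
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper. The route and api-version are assumptions based on the
// Azure Digital Twins "Delete Jobs" REST operation; verify them against the docs.
public static class DeleteJobRequests
{
    public const string ApiVersion = "2023-10-31";

    // Builds, but does not send, a request that starts a bulk delete job.
    public static HttpRequestMessage Create(
        string instanceHost, string bearerToken, string? operationId, int? timeoutInMinutes)
    {
        var query = "api-version=" + ApiVersion;
        if (timeoutInMinutes.HasValue)
        {
            // Optional: when this timeout is reached, the job stops and ends in the failed state (no rollback).
            query += "&timeoutInMinutes=" + timeoutInMinutes.Value;
        }

        var uri = new Uri($"https://{instanceHost}/jobs/deletions?{query}");
        var request = new HttpRequestMessage(HttpMethod.Post, uri);
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", bearerToken);

        // operation-id identifies the job's status monitor; if omitted, the service generates one.
        if (!string.IsNullOrEmpty(operationId))
        {
            request.Headers.TryAddWithoutValidation("operation-id", operationId);
        }
        return request;
    }

    // notstarted and running are in-progress statuses; succeeded and failed are terminal.
    public static bool IsTerminal(string status) =>
        string.Equals(status, "succeeded", StringComparison.OrdinalIgnoreCase) ||
        string.Equals(status, "failed", StringComparison.OrdinalIgnoreCase);
}
```

Sending this request with HttpClient.SendAsync should return 202 Accepted; the Operation-Location response header holds the URL to poll until IsTerminal(status) returns true. Starting a second job while one is running is rejected with 400 Bad Request and the JobLimitReached error code.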

The Delete Jobs API is a useful feature that enables users to delete all models, twins, and relationships on their Azure Digital Twins instance in a simple and convenient way. It offers several benefits, such as:

- Enhanced security and privacy: The Delete Jobs API allows users to remove their data from the instance when they no longer need or own it, or when they need to comply with regulations that mandate data deletion. Azure Digital Twins also encrypts data in transit and at rest, and users can control access to the API by using Azure Active Directory and Azure role-based access control (RBAC).

- Improved performance and efficiency: The Delete Jobs API reduces the complexity and effort of deleting data manually or programmatically, as it allows users to delete all models, twins, and relationships with a single API call. The Delete Jobs API also improves the performance and efficiency of the instance, as it frees up space and resources for new data and operations.

- Simplified management and operation: The Delete Jobs API leverages the existing capabilities and integrations of Azure Digital Twins, which means that users do not need to deploy or maintain any additional hardware or software for their data deletion. The Delete Jobs API also enables users to monitor and manage their delete jobs from anywhere, by using Azure Portal, Azure CLI, or Azure PowerShell.

The Delete Jobs API is worth trying out, especially for users who have large or complex data sets on their Azure Digital Twins instance, because it can greatly simplify data deletion in Azure Digital Twins.

Encryption with customer-managed keys in Azure Health Data Services

Azure Health Data Services provides a compliant environment for storing and processing health data. It offers features including encryption, auditing, role-based access control, and data protection. By default, Azure Health Data Services ensures that the data stored in its underlying Azure services, such as Azure Cosmos DB, Azure Storage, and Azure SQL Database, is encrypted with keys managed by Microsoft.

Microsoft-managed keys are encryption keys that Microsoft creates and handles on behalf of the customer.

These keys provide a hassle-free way to encrypt data without requiring any setup or maintenance on the customer's end.

When customers enable encryption with customer-managed keys for their Azure Health Data Services account, they can specify an Azure Key Vault key URI, which is a unique identifier for their encryption key. Azure Health Data Services then passes this key URI to the underlying Azure services, such as Azure Cosmos DB, Azure Storage, and Azure SQL Database, which use the customer-managed key to encrypt and decrypt the data. Azure Health Data Services also uses the customer-managed key to encrypt and decrypt the data in transit, such as when the data is transferred between Azure services or between Azure and the customer’s applications.
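To make the key URI concrete: a Key Vault key identifier usually has the form https://{vault-name}.vault.azure.net/keys/{key-name}/{key-version}, and the version segment can be omitted in some scenarios. The C# sketch below is a hypothetical helper (KeyVaultKeyUri is not part of any Azure SDK) that splits such a URI into its parts. It only illustrates the format and does not contact Key Vault.

```csharp
#nullable enable
using System;

// Hypothetical value type describing the parts of a Key Vault key URI.
public sealed record KeyVaultKeyUri(string VaultHost, string KeyName, string? KeyVersion)
{
    // Parses URIs such as https://myvault.vault.azure.net/keys/mykey/0123abcd.
    // Returns null when the text does not look like an HTTPS key identifier.
    public static KeyVaultKeyUri? TryParse(string text)
    {
        if (!Uri.TryCreate(text, UriKind.Absolute, out var uri) || uri.Scheme != Uri.UriSchemeHttps)
            return null;

        // Path segments after the host: "keys", the key name, and an optional version.
        var segments = uri.AbsolutePath.Trim('/').Split('/');
        if (segments.Length < 2 || segments.Length > 3 || segments[0] != "keys" || segments[1].Length == 0)
            return null;

        string? version = segments.Length == 3 ? segments[2] : null;
        return new KeyVaultKeyUri(uri.Host, segments[1], version);
    }
}
```

A versioned URI pins one specific key version, while a versionless URI refers to the key by name only.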

Encryption with customer-managed keys offers several benefits for customers, such as:

- Enhanced security and privacy: Encryption with customer-managed keys adds a second layer of encryption on top of the default encryption with Microsoft-managed keys, which means that the data is encrypted twice. This provides an extra level of protection and assurance for the data, as it prevents unauthorized access or disclosure, even if the Microsoft-managed keys are compromised. Encryption with customer-managed keys also enables customers to control and monitor the access and usage of their encryption keys, by using Azure Key Vault or Azure Key Vault Managed HSM features, such as access policies, logging, and auditing.

- Improved compliance and governance: Encryption with customer-managed keys helps customers to meet their specific security or compliance requirements, such as HIPAA or GDPR, that mandate the use of customer-managed keys. Encryption with customer-managed keys also enables customers to demonstrate their compliance and governance to their stakeholders, such as regulators, auditors, or customers, by using Azure Key Vault or Azure Key Vault Managed HSM features, such as reports, certificates, or attestations.

- Simplified management and operation: Encryption with customer-managed keys leverages the existing capabilities and integrations of Azure Key Vault and Azure Key Vault Managed HSM, which means that customers do not need to deploy or maintain any additional hardware or software for their encryption keys. Encryption with customer-managed keys also allows customers to use the same encryption keys for multiple Azure services, which simplifies the management and operation of their encryption keys.

Encryption with customer-managed keys is currently in public preview, which means that it is available for testing and evaluation purposes, but not for production use.

Encryption with customer-managed keys is a promising feature that aims to make encryption easier and better for customers who use Azure Health Data Services. It offers enhanced security and privacy, improved compliance and governance, and simplified management and operation, and it can help customers meet their requirements while saving time, money, and effort. It is worth trying out, especially for customers who have sensitive or confidential health data.

What Is Windows Communication Foundation (WCF)?

Windows Communication Foundation (WCF) is a .NET framework for developing, configuring, and deploying services. It was introduced with .NET Framework 3.0. It is a service-oriented technology used for exchanging information, and it combines features of .NET Remoting, Web Services, and a few other communication-related technologies.

Key Features of WCF:

- Interoperable with other services.

- Provides better reliability and security than ASMX web services.

- Implementing the security model or changing the binding doesn't require many code changes; small changes in the configuration are usually enough to meet your requirements.

Differences between WCF and Web Services

| Web Service | WCF |
|---|---|
| Can be hosted in IIS. | Can be hosted in IIS, Windows Activation Service (WAS), a self-hosted application, or a Windows service. |
| The [WebService] attribute is added to the class, and the [WebMethod] attribute marks each method exposed to the client. | The [ServiceContract] attribute is added to the contract interface (or class), and the [OperationContract] attribute marks each method exposed to the client. |
| Can be accessed over HTTP. | Can be accessed over HTTP, TCP, and named pipes. |
| Supports one-way and request-response operations. | Supports one-way, request-response, and duplex operations. |
| Uses the System.Xml.Serialization namespace for serialization. | Uses the System.Runtime.Serialization namespace for serialization. |
| Cannot be multi-threaded. | Can be multi-threaded (for example, by setting ConcurrencyMode on the [ServiceBehavior] attribute). |
| Uses SOAP or XML for binding. | Supports different types of bindings (BasicHttpBinding, WSHttpBinding, WSDualHttpBinding, etc.). |

Example (Web Service): [WebService] public class myService : System.Web.Services.WebService { [WebMethod] public string Test() { return "Hello! Web Service"; } }

Example (WCF): [ServiceContract] public interface InterfaceTest { [OperationContract] string Test(); } public class myService : InterfaceTest { public string Test() { return "Hello! WCF"; } }

A WCF service has three important components:

- Service class: A WCF service class implements a service as a set of methods.

- Host environment: The host can be a console application, a Windows service, a Windows Forms application, or IIS, as with a normal ASMX web service in .NET.

- Endpoints: All WCF communication takes place through endpoints.

Each endpoint consists of three components: Address, Binding, and Contract. Together they are called the ABCs of endpoints.

Address: The URL where the WCF service is hosted.

Binding: Describes how the client communicates with the service.

Bindings supported by WCF:

| Binding | Description |
|---|---|
| BasicHttpBinding | Basic web service communication. No security by default. |
| WSHttpBinding | Web services with WS-* support. Supports transactions. |
| WSDualHttpBinding | Web services with duplex contracts and transaction support. |
| WSFederationHttpBinding | Web services with federated security. Supports transactions. |
| MsmqIntegrationBinding | Communication directly with MSMQ applications. Supports transactions. |
| NetMsmqBinding | Communication between WCF applications by using queuing. Supports transactions. |
| NetNamedPipeBinding | Communication between WCF applications on the same computer. Supports duplex contracts and transactions. |
| NetPeerTcpBinding | Communication between computers across peer-to-peer services. Supports duplex contracts. |
| NetTcpBinding | Communication between WCF applications across computers. Supports duplex contracts and transactions. |

Contract: Each endpoint specifies a contract that defines which methods of the service class are accessible through that endpoint; each endpoint may expose a different set of methods. The contract is the standard way of describing what the service does.

There are mainly four types of contracts available in WCF:

- Service Contract: Describes the operations that the service provides.

- Data Contract: Describes the custom data types that are exposed to the client.

- Message Contract: WCF uses SOAP messages for communication. A message contract is used to control the structure of the message body and the serialization process. It is also used to send and access information in SOAP headers. By default, WCF creates SOAP messages according to the service's data contracts and operation contracts.

- Fault Contract: A fault contract gives the client a documented view of errors that occurred in the service, which makes it easy to identify what error occurred and where. By default, when the service throws an exception, its details do not reach the client.
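The Data Contract idea can be tried without a service host: WCF's default serializer is DataContractSerializer from System.Runtime.Serialization, and it writes only the members marked [DataMember]. The Greeting type and DataContractDemo helper below are hypothetical examples, not part of the HELLO service built later in this post.

```csharp
using System.IO;
using System.Runtime.Serialization;
using System.Text;

// Hypothetical data contract: only [DataMember] members are exposed to the client.
[DataContract(Namespace = "http://example.org/wcfdemo")]
public class Greeting
{
    [DataMember]
    public string Text { get; set; } = "";

    [DataMember]
    public int Priority { get; set; }

    // No [DataMember] attribute, so this property is never serialized.
    public string InternalNote { get; set; } = "";
}

public static class DataContractDemo
{
    // Serializes a value to XML with DataContractSerializer, WCF's default serializer.
    public static string ToXml<T>(T value)
    {
        var serializer = new DataContractSerializer(typeof(T));
        using var stream = new MemoryStream();
        serializer.WriteObject(stream, value);
        return Encoding.UTF8.GetString(stream.ToArray());
    }
}
```

Serializing a Greeting produces Text and Priority elements but no trace of InternalNote, which is exactly the "exposed to the client" boundary a data contract draws.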

Creating WCF service in visual studio 2013:

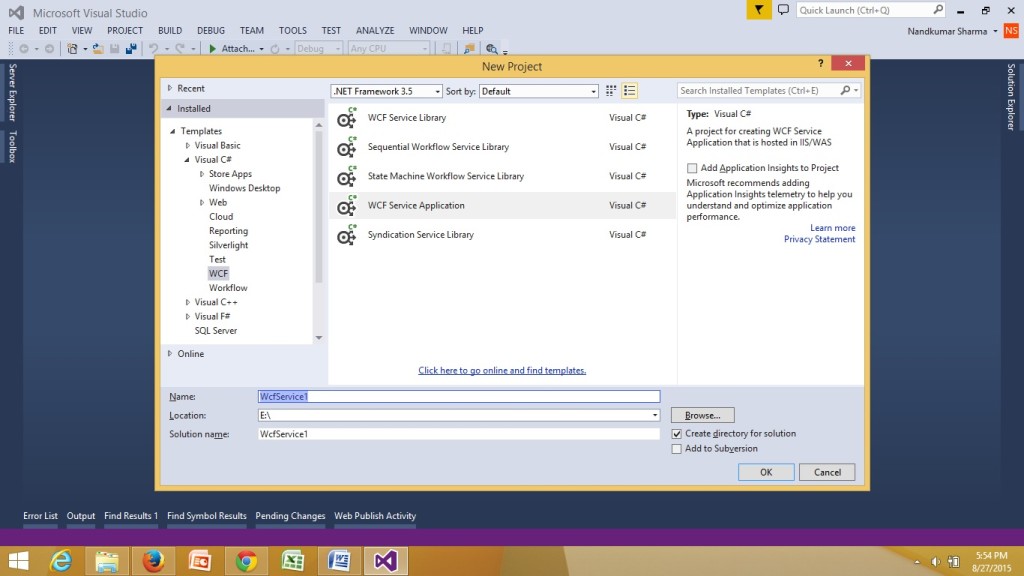

- First open Visual Studio 2013. Create a new project and select WCF Service Application and give it the name WcfService1.

- Delete default created IService1.cs and Service1.svc file.

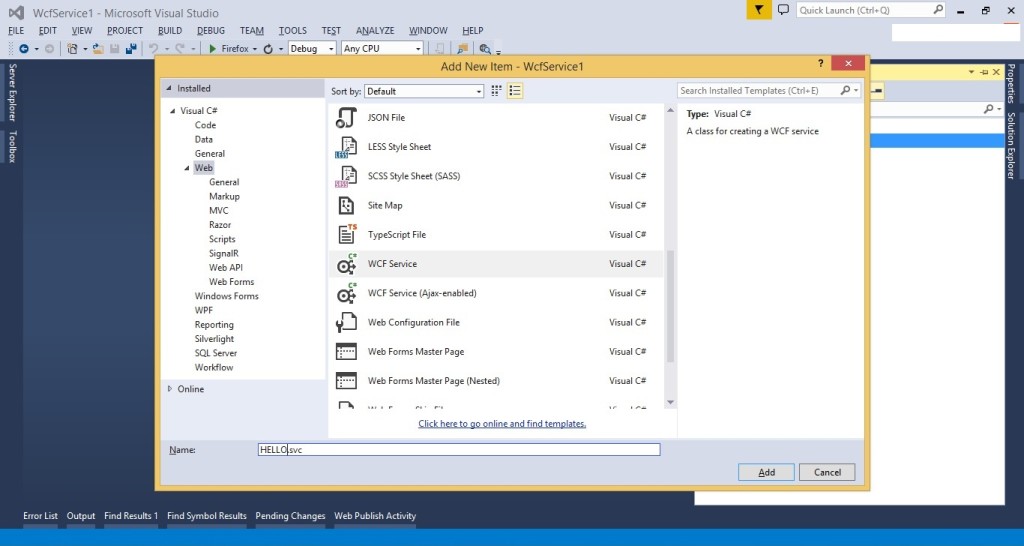

- Right-click on the project and select “Add” | “New Item…”

- From the Web tab choose WCF Service to add.

- give the service the name “HELLO.svc”

- Open IHELLO.cs and remove the “void DoWork()”.

IHELLO.cs

using System;

using System.Collections.Generic;

using System.Linq;

using System.Runtime.Serialization;

using System.ServiceModel;

using System.Text;

namespace WcfService1

{

// NOTE: You can use the “Rename” command on the “Refactor” menu to change the interface name “IHELLO” in both code and config file together.

[ServiceContract]

public interface IHELLO

{

[OperationContract]

string sayHello();

}

}

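- Configure endpoints with metadata

To do this, open the Web.config file. Inside the <system.serviceModel> element we create one endpoint with basicHttpBinding and a second endpoint that exposes the service's metadata. The behavior named in behaviorConfiguration enables metadata publishing so that the WSDL can be viewed in the browser.

<services>
<service behaviorConfiguration="WcfService1.HELLOBehavior" name="WcfService1.HELLO">
<endpoint address="" binding="basicHttpBinding" contract="WcfService1.IHELLO"/>
<endpoint address="mex" binding="mexHttpBinding" contract="IMetadataExchange" />
</service>
</services>
<behaviors>
<serviceBehaviors>
<behavior name="WcfService1.HELLOBehavior">
<serviceMetadata httpGetEnabled="true"/>
</behavior>
</serviceBehaviors>
</behaviors>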

- Implement the service in HELLO.svc.cs:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Runtime.Serialization;

using System.ServiceModel;

using System.Text;

namespace WcfService1

{

public class HELLO : IHELLO

{

public string sayHello()

{

return "Hello! welcome to WCF";

}

}

}

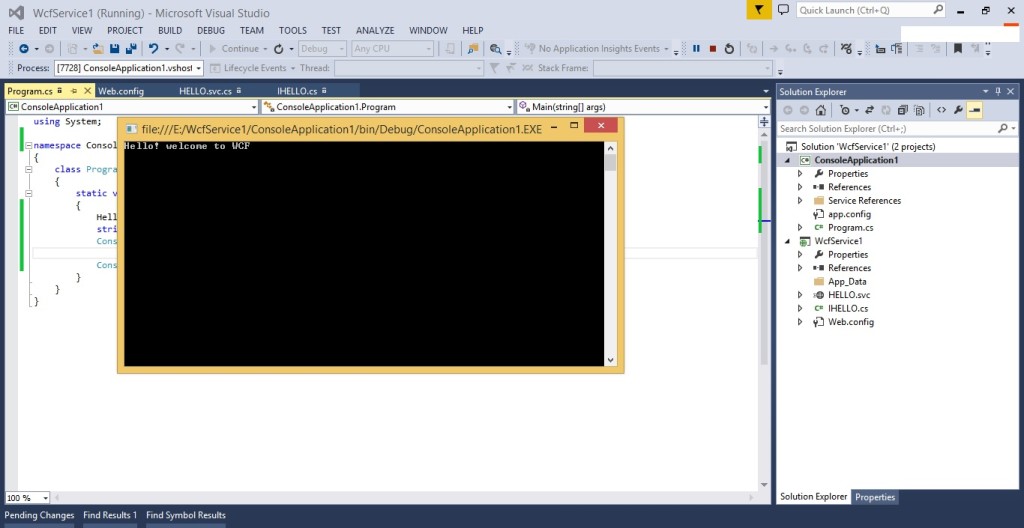

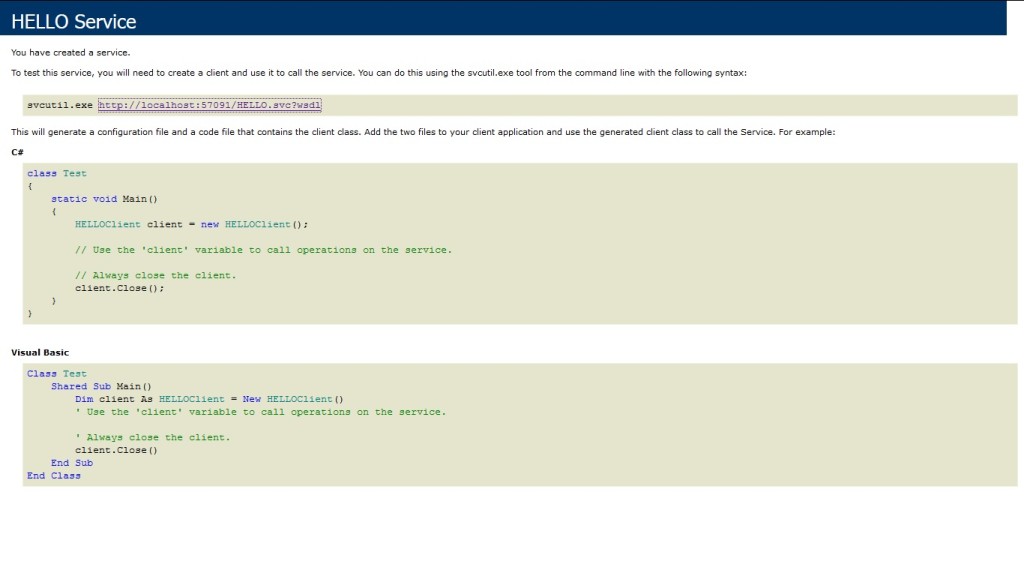

Now we have created the Service and configured the Endpoint.To host it press F5 in Visual Studio.

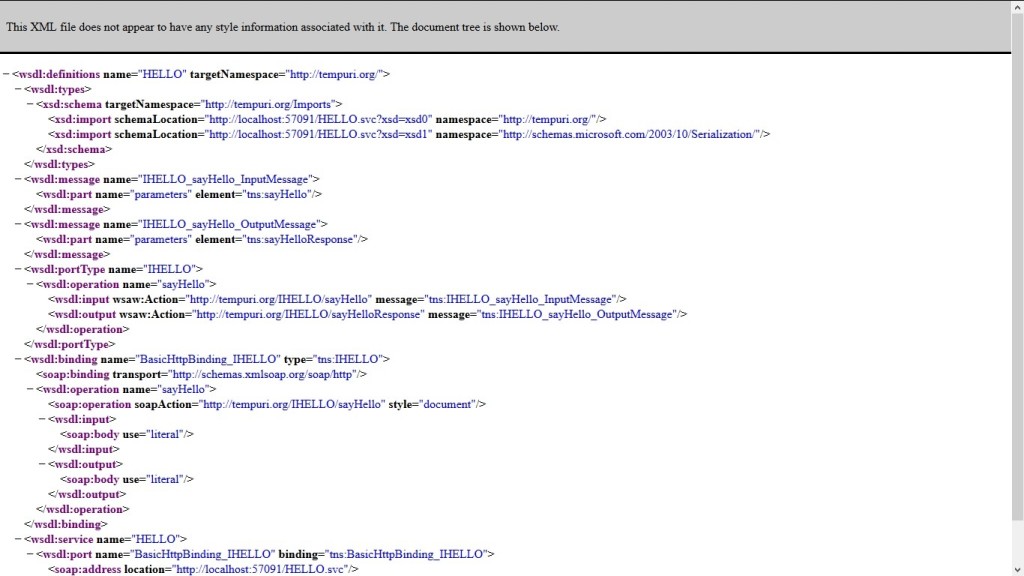

In the browser you will see the Service as in the following.  To view the metadata of the Service click on the WSDL URL.

To view the metadata of the Service click on the WSDL URL.

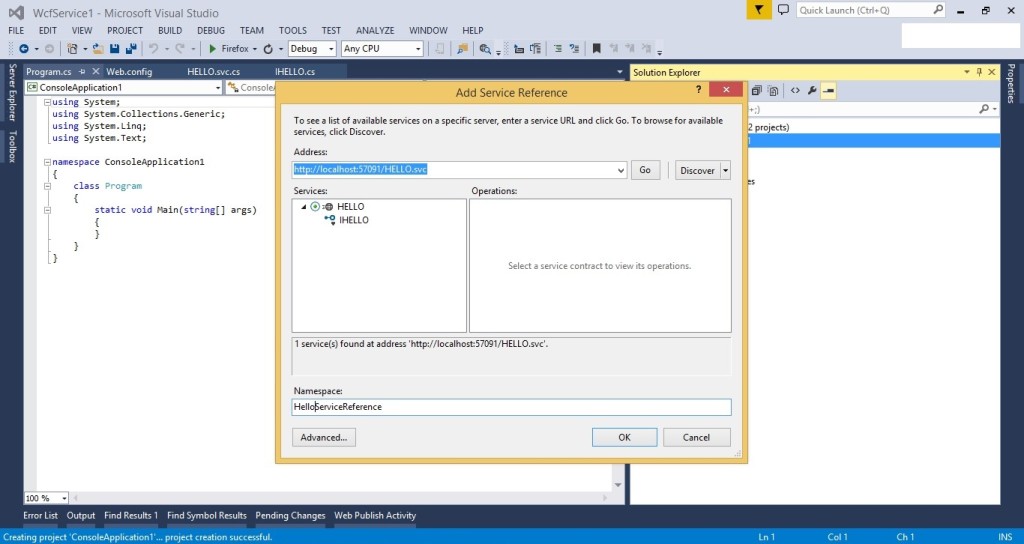

In a Windows or web application, we can consume this WCF service by using “Add Service Reference”: enter the service address “http://localhost:57091/HELLO.svc” and click Go.

I created a console application and added a service reference.

Program.cs

using System;

namespace ConsoleApplication1

{

class Program

{

static void Main(string[] args)

{

HelloServiceReference.HELLOClient oHell = new HelloServiceReference.HELLOClient();

string str= oHell.sayHello();

Console.WriteLine(str);

}

}

}

Related helpful links

https://msdn.microsoft.com/en-us/library/ms731082(v=vs.110).aspx

I hope this will help you. Your valuable feedback and comments are important to me.

Caching in MVC 5 Asp.net

Hello Everyone,

In this blog I want to share the advantages of caching in MVC. It's very important to know how caching is used in MVC websites.

By using caching, we can store content in the client's browser and/or on the server, and access that data and content quickly when it is needed again. Caching data or content provides the following advantages:

- Minimize Request To Hosting Server

- Minimize Request To Database Server

- Reduce Network Traffic

- Save Time and resources

These advantages help improve the performance of an MVC website.

Two types of caching are available in MVC:

- Page Output Caching

- Application Caching

In this blog we will discuss page output caching. We can enable it by adding the OutputCache attribute to either an individual controller action or an entire controller class.

OutputCache filter parameters:

| Parameter | Type | Description |
|---|---|---|
| CacheProfile | string | The name of the output cache profile defined in the outputCacheProfiles element of Web.config. |

Example: creating a cache profile in the caching section of Web.config (inside system.web).

<caching>

<outputCacheSettings>

<outputCacheProfiles>

<add name="Test1" duration="30" varyByParam="none"/>

</outputCacheProfiles>

</outputCacheSettings>

</caching>

This cache profile is then used in the action method, as shown below.

[OutputCache(CacheProfile = "Test1")]

public ActionResult Index()

{

return View();

}

| Parameter | Type | Description |
|---|---|---|
| Duration | int | The number of seconds to cache the content. |

Example: The output of the Index() action is cached for 30 seconds.

using System.Web.Mvc;

namespace MvcApplication1.Controllers

{

public class HomeController : Controller

{

[OutputCache(Duration=30, VaryByParam="none")]

public ActionResult Index()

{

return View();

}

}

}

Note: If you don't define the duration, the content is cached for the default duration of 60 seconds.

| Parameter | Type | Description |
|---|---|---|
| Location | OutputCacheLocation | The location where the output is cached. It can be one of the following values: Any, Client, Downstream, Server, None, or ServerAndClient. |

Example: Cache stored in the client browser.

using System.Web.Mvc;

using System.Web.UI;

namespace MvcApplication1.Controllers

{

public class UserController : Controller

{

[OutputCache(Duration=3600, VaryByParam="none", Location=OutputCacheLocation.Client)]

public ActionResult Index()

{

return View();

}

}

}

| Parameter | Type | Description |
|---|---|---|
| VaryByParam | string | Creates different cached versions of the same content when a form parameter or query string parameter varies. If not specified, the default value is none. VaryByParam="none" specifies that caching doesn't depend on any parameter. |
| VaryByCustom | string | Used for custom output cache requirements. |
| VaryByHeader | string | A list of HTTP header names used to vary the cached content. |

Example:

public class TestController : Controller

{

[OutputCache(Duration = 30, VaryByParam = "none")]

public ActionResult Index()

{

return View();

}

[OutputCache(Duration = 30, VaryByParam = "id")]

public ActionResult Index1()

{

return View();

}

}
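To see what Duration and VaryByParam control, here is a simplified, hypothetical model of an output cache written as a plain C# class. It is not how ASP.NET implements OutputCacheAttribute; it only shows that cached entries are keyed by the action plus the values of the VaryByParam parameters, and that each entry expires after Duration seconds. The current time is passed in so the behavior is easy to test.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical, simplified output cache; NOT the ASP.NET OutputCacheAttribute implementation.
public sealed class SimpleOutputCache
{
    private readonly Dictionary<string, (string Content, DateTime Expires)> _entries =
        new Dictionary<string, (string Content, DateTime Expires)>();

    // Builds a cache key from the action name plus the values of the parameters listed in
    // varyByParam (semicolon-separated). "none" ignores parameters, so all requests share one entry.
    // The real attribute also accepts "*" (vary by all parameters); that case is not modeled here.
    public static string BuildKey(string action, string varyByParam, IDictionary<string, string> parameters)
    {
        if (string.Equals(varyByParam, "none", StringComparison.OrdinalIgnoreCase))
            return action;

        var names = varyByParam.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries)
                               .Select(n => n.Trim());
        var parts = names.Select(n => n + "=" + (parameters.TryGetValue(n, out var v) ? v : ""));
        return action + "?" + string.Join("&", parts);
    }

    // Returns cached content while it is fresh; otherwise renders it, stores it for durationSeconds, and returns it.
    public string GetOrRender(string key, int durationSeconds, DateTime now, Func<string> render)
    {
        if (_entries.TryGetValue(key, out var entry) && now < entry.Expires)
            return entry.Content;

        var content = render();
        _entries[key] = (content, now.AddSeconds(durationSeconds));
        return content;
    }
}
```

With VaryByParam = "id", requests for Index1?id=1 and Index1?id=2 get separate cache entries, while Index with VaryByParam = "none" serves the same cached page to every caller until the 30 seconds are up.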

| Parameter | Type | Description |
|---|---|---|
| NoStore | bool | Enables or disables the HTTP Cache-Control: no-store directive. Use it only to protect very sensitive data. |
| SqlDependency | string | The database and table name pairs that the cached content depends on. The cached data expires automatically when the data changes in the database. |

For more information visit here

I hope this will help you. Your valuable feedback and comments are important to me.

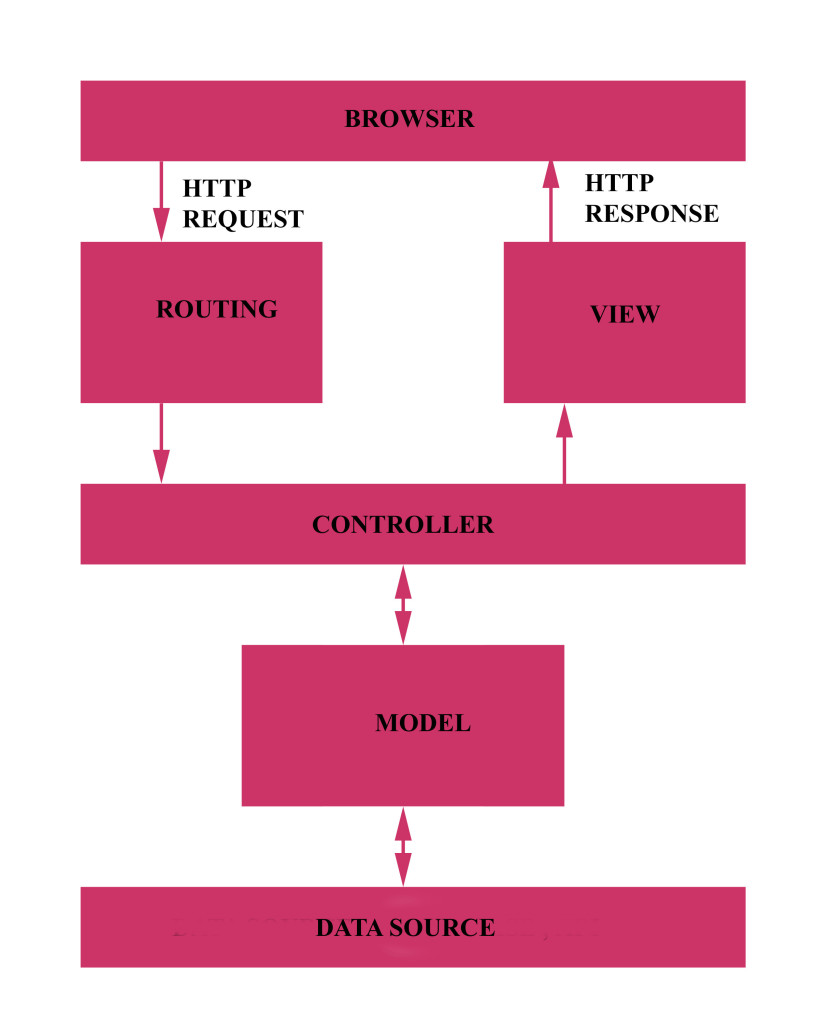

ASP .NET MVC Life Cycle

Hello Everyone,

In this blog we will discuss the life cycle of ASP.NET MVC. Before starting with MVC, it's very important to know how your request is processed.

The figure above shows how an MVC application processes a request. Your browser sends a request and receives a response in the form of an HTTP request and an HTTP response, respectively. Routing analyzes the request and invokes an action of the appropriate controller. The action calls the appropriate method of the model. The model communicates with the data source (e.g. a database or an API). Once the model completes its operation, it returns data to the controller, which then loads the appropriate view. The view executes presentation logic using the supplied data. Finally, an HTTP response is returned to the browser. This is just a quick overview of the MVC life cycle.

Let's look at the MVC life cycle in detail. It consists of the following steps.

- Receive the first request for the application: An MVC application contains only one route table, which maps particular URLs to particular controllers. Route objects are added to the RouteTable when the application first starts, as shown in the Global.asax code below. The Application_Start() method is invoked only once, when the very first page is requested.

using System.Web;

using System.Web.Mvc;

using System.Web.Routing;

public class MvcApplication : System.Web.HttpApplication

{

public static void RegisterGlobalFilters(GlobalFilterCollection filters)

{

filters.Add(new HandleErrorAttribute());

}

public static void RegisterRoutes(RouteCollection routes)

{

routes.IgnoreRoute("{resource}.axd/{*pathInfo}");

routes.MapRoute(

"Default", // Route name

"{controller}/{action}/{id}", // URL with parameters

new { controller = "Home", action = "Index", id = UrlParameter.Optional } // Parameter defaults

);

}

protected void Application_Start()

{

AreaRegistration.RegisterAllAreas();

RegisterGlobalFilters(GlobalFilters.Filters);

RegisterRoutes(RouteTable.Routes);

}

}

- Perform routing: When we make a request in MVC, the UrlRoutingModule reads the request and creates a RequestContext object. If the requested URL matches a route in the RouteTable, the routing engine forwards the request to the corresponding IRouteHandler for that route; the default route handler creates an MvcHandler. If no URL pattern in the route table matches, the request ends with a 404 HTTP status code.

- Create the MVC request handler: MvcHandler implements the IHttpHandler interface and processes the request further in its ProcessRequest method. When ProcessRequest() is called on the MvcHandler object, a new controller is created, as shown in the code below.

- Create and execute the controller: The controller is created by a controller factory. This is an extensibility point, because you can create your own controller factory. The default one is named, appropriately enough, DefaultControllerFactory. The RequestContext and the name of the controller are passed to the factory's CreateController() method to get a particular controller. Finally, the Execute() method is called on the controller and receives the RequestContext; the controller uses it to build a ControllerContext from the RequestContext and itself. For reference, check the code below.

protected internal virtual void ProcessRequest(HttpContextBase httpContext)

{

SecurityUtil.ProcessInApplicationTrust(delegate {

IController controller;

IControllerFactory factory;

this.ProcessRequestInit(httpContext, out controller, out factory);

try

{

controller.Execute(this.RequestContext);

}

finally

{

factory.ReleaseController(controller);

}

});

}

- Invoke the action: The Execute() method finds the action of the controller to execute. We write the controller class, and Execute() finds one of the methods we wrote in it and executes it.

- Execute the result: The controller's action method receives the user input data, processes it, and returns a result. The built-in result types include ViewResult, RedirectToRouteResult, RedirectResult, ContentResult, JsonResult, FileResult, and EmptyResult.
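The routing, controller-creation, and action-invocation steps above can be imitated in a few lines of plain C#. The sketch below is hypothetical and heavily simplified (it is not System.Web.Routing, MvcHandler, or DefaultControllerFactory): it matches a URL against the {controller}/{action}/{id} pattern with the same defaults as the Default route, finds a class named {controller}Controller by reflection, and invokes the action method. It returns null where the real pipeline would end with a 404.

```csharp
#nullable enable
using System;
using System.Linq;
using System.Reflection;

// Hypothetical controller used by the sketch (not derived from System.Web.Mvc.Controller).
public class HomeController
{
    public string Index(string? id) => "Home/Index id=" + (id ?? "(none)");
    public string About(string? id) => "Home/About";
}

public static class MiniMvc
{
    // Matches "{controller}/{action}/{id}" using the Default route's values
    // (controller = "Home", action = "Index", id optional). Returns null when there is no match.
    public static (string Controller, string Action, string? Id)? MatchDefaultRoute(string path)
    {
        var segments = path.Split('/', StringSplitOptions.RemoveEmptyEntries);
        if (segments.Length > 3) return null;

        var controller = segments.Length > 0 ? segments[0] : "Home";
        var action = segments.Length > 1 ? segments[1] : "Index";
        string? id = segments.Length > 2 ? segments[2] : null;
        return (controller, action, id);
    }

    // Plays the roles of the controller factory and the action invoker:
    // find "{controller}Controller" by naming convention, create it, and call the action.
    public static string? Execute(string path)
    {
        var route = MatchDefaultRoute(path);
        if (route == null) return null;   // no matching route
        var (controllerName, actionName, id) = route.Value;

        var controllerType = Assembly.GetExecutingAssembly().GetTypes().FirstOrDefault(t =>
            string.Equals(t.Name, controllerName + "Controller", StringComparison.OrdinalIgnoreCase));
        if (controllerType == null) return null;   // unknown controller

        // DeclaredOnly keeps inherited methods such as ToString() from being treated as actions.
        var action = controllerType.GetMethod(actionName,
            BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly | BindingFlags.IgnoreCase);
        if (action == null || action.ReturnType != typeof(string)) return null;   // unknown action

        var controller = Activator.CreateInstance(controllerType);
        return (string?)action.Invoke(controller, new object?[] { id });
    }
}
```

For example, "/" resolves to HomeController.Index with no id, and "/Home/Index/5" passes "5" as the id, mirroring what the Default route does.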

For more details, visit HERE.

I hope this will help you. Your valuable feedback and comments are important to me.

Getting started with MVC ASP .NET – Part 2

Hello Everyone,

In my previous blog, I tried to explain basic details of the MVC framework.

Now I would like to share a detailed view of the Razor engine, the ASPX engine, and the various files and folders shown in Solution Explorer.

What are RAZOR and ASPX?

Razor and ASPX are both view engines. A view engine is responsible for rendering a view into HTML for the browser. MVC supports both Web Form (ASPX) and Razor. ASP.NET MVC is now open source and can work with other third-party view engines such as Spark and NHaml.

What are the differences between RAZOR and Web Form (ASPX)?

| RAZOR | Web Form (ASPX) |
|---|---|
| The Razor view engine is an advanced view engine introduced with MVC 3. It is not a language; it is a markup syntax. | The ASPX view engine was the default view engine for ASP.NET MVC and has been included with ASP.NET MVC from the beginning. |
| The namespace for the Razor engine is System.Web.Razor. | The Web Form (ASPX) engine is implemented by the System.Web.Mvc.WebFormViewEngine class. |
| Uses the .cshtml (C#) or .vbhtml (VB) extension for views, partial views, editor templates, and layout pages. | Uses the .aspx extension for views, .ascx for partial views and editor templates, and .master for layout (master) pages. |
| Razor is easier and cleaner than Web Form. It uses the @ symbol in code, e.g. @Html.ActionLink("Test", "Test") | ASPX uses the <% and %> delimiters in code, e.g. <%: Html.ActionLink("Test", "Test") %> |
| The Razor engine is comparatively slower but provides better security than ASPX. It prevents XSS (cross-site scripting) attacks by encoding scripts and HTML characters such as < and > before rendering the view. | Web Form is comparatively faster but less secure than Razor. Its <%= %> syntax does not encode output, so any script saved in the database can run while the page is rendered. |
| The Razor engine doesn't support design mode in Visual Studio, so you cannot see how a page looks without running the application. | The Web Form engine supports design mode in Visual Studio, so you can see how a page looks without running the application. |
| The Razor engine supports TDD (test-driven development) because it does not depend on the System.Web.UI.Page class. | The Web Form engine doesn't support TDD (test-driven development) well because it depends on the System.Web.UI.Page class, which makes testing complex. |
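The XSS row above comes down to HTML encoding. Razor's @ syntax encodes output before writing it; the C# sketch below uses System.Net.WebUtility.HtmlEncode to show the same kind of transformation on a script that might have been saved in the database. The EncodingDemo class and the stored comment are hypothetical, and Razor's own encoder is not necessarily identical character for character.

```csharp
using System.Net;

public static class EncodingDemo
{
    // Hypothetical value saved in the database by a malicious user.
    public const string StoredComment = "<script>alert('hacked')</script>";

    // Encoded output: the browser displays it as text instead of running it as script.
    public static string RenderEncoded(string value) => WebUtility.HtmlEncode(value);

    // Raw output, which is what an unencoded expression writes: the browser would run it.
    public static string RenderRaw(string value) => value;
}
```

RenderEncoded turns the opening tag into &lt;script&gt;, so the page shows the text of the script instead of executing it.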

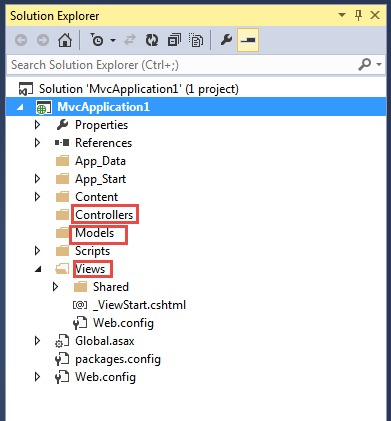

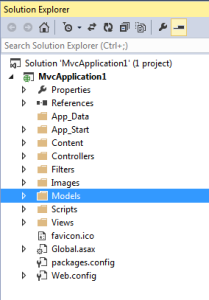

What are the different files and folders in an MVC application?

MVC has a default folder structure, as shown in the figure below. The MVC framework follows naming conventions: models go in the Models folder, controllers in the Controllers folder, views in the Views folder, and so on. This convention reduces code and makes the architecture easy for developers to understand.

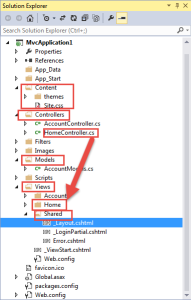

The App_Data folder is used for storing application data.

The Content folder is used for static files like style sheets, themes, icons and images.

The Controllers folder contains the controller classes responsible for handling user input and responses.

The Models folder contains the classes that represent the application models. Models hold and manipulate application data.

The Views folder stores the files related to the views of the application. It contains one folder for each controller plus a Shared folder, which stores views shared across the application, such as _Layout.cshtml.

The Scripts folder stores the JavaScript files of the application.

For more details you can visit here.

I hope this will help you.

Your valuable feedback and comments are important for me.

Getting Started with MVC ASP.net

Hello everyone,

I would like to share some basic knowledge about MVC in ASP.NET.

What is MVC?

MVC (Model-View-Controller) is an architectural pattern for implementing business logic and the user interface separately. It divides an application into three components:

Model: Represents the application's data, e.g. a database table. It is where you implement the business logic and validations for your application. The model is associated with the view and the controller.

View: Represents the user interface of your application. The user interface is created based on the model. The view is used for displaying information, and it contains controls such as text boxes, labels, and drop-down lists.

Controller: The controller is the important bridge between the view and the model; it is the heart of MVC. The controller can access and use the model's data and pass data to views by using ViewData.
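The three roles can be sketched without any framework. In the hypothetical C# example below, Product is the model (data plus a simple validation rule), ProductController reads the model and fills a ViewData-like dictionary, and ProductView turns that data into HTML. None of these classes come from ASP.NET MVC; they only show how the responsibilities are separated.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Model: holds data and a simple business rule.
public record Product(string Name, decimal Price)
{
    public bool IsValid => !string.IsNullOrWhiteSpace(Name) && Price >= 0;
}

// View: presentation logic only; it renders whatever data it is given.
public static class ProductView
{
    public static string Render(IDictionary<string, object> viewData)
    {
        var title = (string)viewData["Title"];
        var products = (IEnumerable<Product>)viewData["Products"];
        var items = string.Concat(products.Select(p =>
            $"<li>{p.Name}: {p.Price.ToString("0.00", CultureInfo.InvariantCulture)}</li>"));
        return $"<h1>{title}</h1><ul>{items}</ul>";
    }
}

// Controller: reads the model and passes data to the view through a ViewData-like dictionary.
public class ProductController
{
    private readonly List<Product> _repository = new List<Product>
    {
        new Product("Keyboard", 25m),
        new Product("", 10m),   // invalid: filtered out by the model's rule
    };

    public string Index()
    {
        var viewData = new Dictionary<string, object>
        {
            ["Title"] = "Products",
            ["Products"] = _repository.Where(p => p.IsValid).ToList(),
        };
        return ProductView.Render(viewData);
    }
}
```

Because the view never touches the repository and the model never builds HTML, each part can be changed or tested separately, which is the point of the pattern.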

Why do we need MVC?

- MVC separates your application into three separate components. This helps a development team split the application so that each developer can work separately, without depending on the others.

- It provides a flexible and systematic way to structure your application.

- It reduces development time. Less development time means less effort, which saves the company money and leaves developers more free time.

For More Details Visit Link:

https://msdn.microsoft.com/en-us/library/dd381412%28v=vs.108%29.aspx

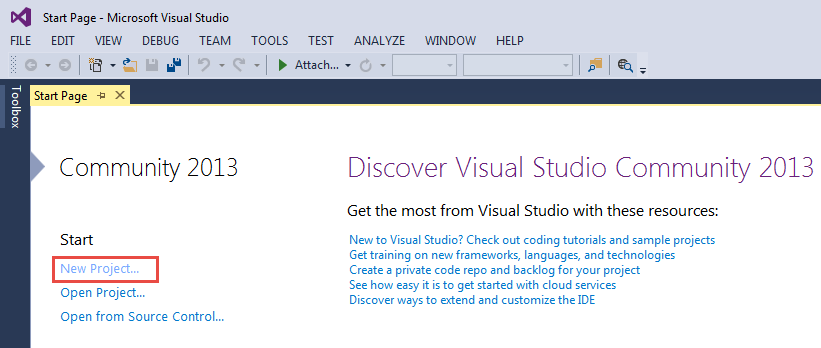

Create Sample Project in MVC ASP .NET.

It's very simple to create an MVC application in just a few steps.

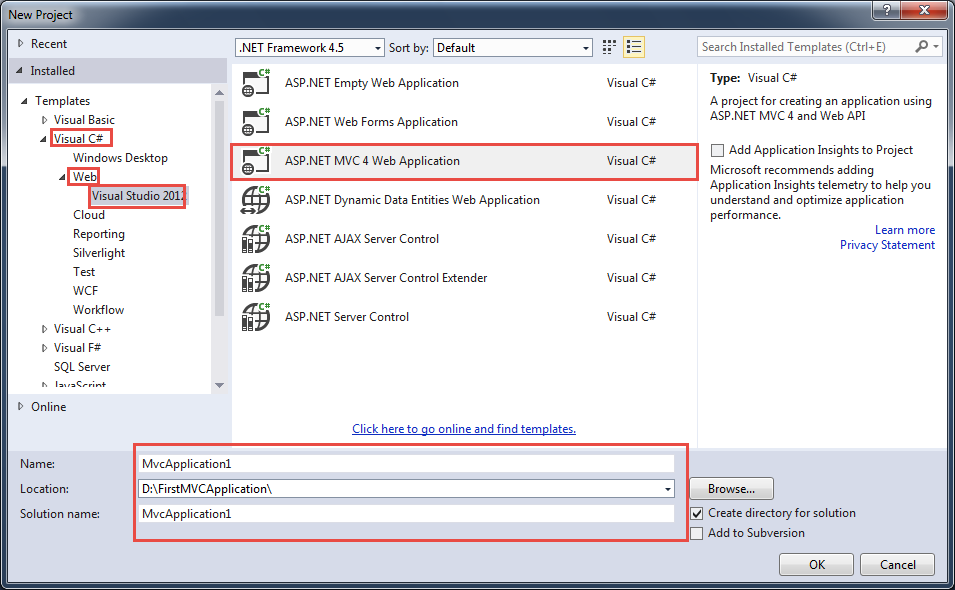

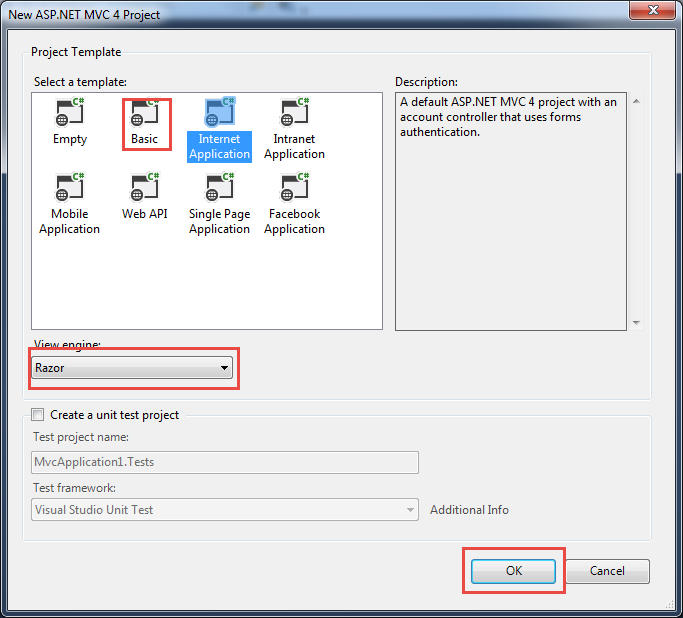

- Open Visual Studio 2013, click File, then click New Project.

- Expand the Visual C# node, click Web, click ASP.NET Web Application, provide a name and application path, and then press OK.

- Click Basic, check the view engine, and then click OK.

- Your MVC application is now created.

For more details, see http://www.asp.net/mvc/overview/getting-started/introduction/getting-started

Also check: https://www.youtube.com/watch?v=Lp7nSImO5vk

I hope this will help you.

What Is New in Visual Studio 2015?

It's an exciting moment for .NET lovers. Microsoft has announced Visual Studio 2015. I'm excited to share what its features will be, how much it costs, and where we can download it.

Visual Studio 2015 Feature

.NET 2015 is open source and cross-platform.

.NET 2015 will run on various versions of the Windows operating system (e.g. Windows 7 and Windows 8) as well as on Mac and Linux. It will be both open source and supported by Microsoft, with development happening on GitHub.

ASP.NET 5 will work everywhere.

ASP.NET 5 will be easily executed on Windows, Mac, and Linux. Mac and Linux support will come soon and it’s all going to happen in the open on GitHub.

ASP.NET needs a web server to run: IIS on Windows, while Kestrel, a web server built on libuv, will serve that role on Mac and Linux.

Developers should have a great experience with Visual Studio Community.

Visual Studio 2015 will include a new free edition for open source developers and students, called Visual Studio Community. It supports extensions and much more, all in one download. This is not Express; it is basically Professional.

Create Application For Any Device.

Visual Studio 2015 Preview facilitates developers to build applications and services for any device and on any platform.

If you want more details about the features, visit the links below.

- http://news.microsoft.com/2014/11/12/microsoft-takes-net-open-source-and-cross-platform-adds-new-development-capabilities-with-visual-studio-2015-net-2015-and-visual-studio-online/

- https://www.visualstudio.com/en-us/news/release-archive-vso.aspx#build_numbers

Cost and Download links:

Visual Studio Community 2015 corresponds to Visual Studio Community 2013 and will remain free. Visual Studio Professional 2015 with MSDN will cost $1,199 and corresponds fairly directly to Visual Studio Professional 2013 with MSDN.

For more details, visit Cost and Details.

If you want to download it, click here: Visual Studio 2015 CTP 6.

What is .NET?

.NET (sometimes informally expanded as "Network Enabled Technology", although Microsoft never defined it as an acronym) is a software framework created by Microsoft that primarily runs on the Windows operating system. .NET provides tools and libraries that let developers build applications and services more easily, quickly, and securely.

The .NET Framework is designed to fulfill the following objectives:

- To provide a consistent object-oriented programming environment whether object code is stored and executed locally, executed locally but Internet-distributed, or executed remotely.

- To provide a code-execution environment that minimizes software deployment and versioning conflicts.

- To provide a code-execution environment that promotes safe execution of code, including code created by an unknown or semi-trusted third party.

- To provide a code-execution environment that eliminates the performance problems of scripted or interpreted environments.

- To make the developer experience consistent across widely varying types of applications, such as Windows-based applications and Web-based applications.

- To build all communication on industry standards to ensure that code based on the .NET Framework can integrate with any other code.

The two main components of the .NET Framework are the Common Language Runtime (CLR) and the .NET Framework Class Library (FCL).

Common Language Runtime (CLR)

The CLR is the heart of the .NET Framework. It is an execution environment that manages memory, thread execution, code execution, code safety verification, compilation, and other system services.

It has four main features:

CIL (Common Intermediate Language): During compilation of code, the source code is translated into CIL code rather than into platform- or processor-specific object code. CIL is a CPU- and platform-independent instruction set that can be executed in any environment supporting the Common Language Infrastructure, such as the .NET runtime on Windows or the cross-platform Mono runtime. In theory, this eliminates the need to distribute different executable files for different platforms and CPU types. CIL code is verified for safety during runtime, providing better security and reliability than natively compiled executable files.

The execution process looks like this:

- Source code is converted to CIL

- CIL is then assembled into a form of so-called bytecode and a CLI assembly is created.

- Upon execution of a CLI assembly, its code is passed through the runtime’s JIT compiler to generate native code. Ahead-of-time compilation may also be used, which eliminates this step, but at the cost of executable-file portability.

- The computer’s processor executes the native code.
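To make the idea of a CPU-independent instruction set concrete, here is a minimal sketch, in Python, of a toy stack-based interpreter. It borrows a few real CIL mnemonics (`ldarg`, `ldc.i4`, `add`, `mul`, `ret`), but the tuple encoding, the `PROGRAM` listing and the `run` function are hypothetical illustrations, not how the CLR represents or executes CIL. In particular, the real runtime JIT-compiles CIL to native code rather than interpreting it instruction by instruction.

```python
def run(program, args):
    """Execute a list of stack instructions and return the value popped by 'ret'.

    Toy model only: opcodes are named after CIL mnemonics, but this is an
    interpreter, not a JIT compiler like the one in the CLR.
    """
    stack = []
    for op, *operands in program:
        if op == "ldarg":        # push the argument at the given index
            stack.append(args[operands[0]])
        elif op == "ldc.i4":     # push a 32-bit integer constant
            stack.append(operands[0])
        elif op == "add":        # pop two values, push their sum
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "mul":        # pop two values, push their product
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        elif op == "ret":        # return the value on top of the stack
            return stack.pop()
        else:
            raise ValueError(f"unknown opcode: {op}")
    raise ValueError("program ended without 'ret'")


# Hypothetical compiled form of: return (x + 2) * 3
PROGRAM = [("ldarg", 0), ("ldc.i4", 2), ("add",), ("ldc.i4", 3), ("mul",), ("ret",)]

print(run(PROGRAM, [4]))  # 18
```

The same `PROGRAM` list runs unchanged wherever an interpreter for it exists, which is the portability property that CIL provides; the CLR gets native speed on top of that by compiling the instructions for the current processor.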

Code Verification: The runtime also enforces code robustness by implementing a strict type-and-code-verification infrastructure called the Common Type System (CTS). The CTS describes a set of types that can be used in common across different .NET languages; that is, it ensures that objects written in different .NET languages can interact with each other. For programs written in any .NET-compliant language to communicate, their types have to be compatible at a basic level. These types can be value types or reference types. Value types hold their data directly and are copied when assigned or passed as arguments; value-type local variables typically live on the stack. Reference types are allocated on the managed heap, and variables of those types hold references to the objects, so assignment copies the reference rather than the object. The CTS provides a base set of data types that is responsible for cross-language integration. The CLR can load and execute code compiled from any .NET language only if its types are described in the CTS.
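The cross-language role of the CTS can be illustrated with a small lookup table. C# `int` and VB.NET `Integer` are both aliases for `System.Int32`, so values of those types pass between the two languages unchanged. The sketch below is a hypothetical Python model of that mapping (the dictionary names and the `same_cts_type` and `kind` helpers are illustrative only). It covers a handful of built-in types and records whether each is a value type or a reference type.

```python
# Hypothetical model: CTS type name -> "value" or "reference"
CTS_KIND = {
    "System.Int32": "value",
    "System.Int64": "value",
    "System.Double": "value",
    "System.Boolean": "value",
    "System.String": "reference",
    "System.Object": "reference",
}

# Language keywords that alias the CTS types above
CSHARP = {"int": "System.Int32", "long": "System.Int64", "double": "System.Double",
          "bool": "System.Boolean", "string": "System.String", "object": "System.Object"}
VBNET = {"Integer": "System.Int32", "Long": "System.Int64", "Double": "System.Double",
         "Boolean": "System.Boolean", "String": "System.String", "Object": "System.Object"}


def same_cts_type(cs_keyword: str, vb_keyword: str) -> bool:
    """True when a C# keyword and a VB.NET keyword name the same CTS type."""
    return CSHARP[cs_keyword] == VBNET[vb_keyword]


def kind(language_map: dict, keyword: str) -> str:
    """Return 'value' or 'reference' for a keyword in the given language map."""
    return CTS_KIND[language_map[keyword]]


print(same_cts_type("int", "Integer"))  # True
print(kind(CSHARP, "string"))           # reference
```

Because both keyword tables resolve to one shared set of type names, a method written in one language can accept arguments produced by the other without any conversion layer.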

Code Access Security: The runtime enforces code access security. For example, users can trust that an executable embedded in a Web page can play an animation on screen or sing a song, but cannot access their personal data, file system, or network. The security features of the runtime thus enable legitimate Internet-deployed software to be exceptionally feature-rich.

Garbage Collection: The .NET Framework’s garbage collector manages the allocation and release of memory for your application. Each time you create a new object, the common language runtime allocates memory for the object from the managed heap. As long as address space is available in the managed heap, the runtime continues to allocate space for new objects. However, memory is not infinite. Eventually the garbage collector must perform a collection in order to free some memory. The garbage collector’s optimizing engine determines the best time to perform a collection, based upon the allocations being made. When the garbage collector performs a collection, it checks for objects in the managed heap that are no longer being used by the application and performs the necessary operations to reclaim their memory.
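The collection step described above can be sketched as a toy mark-and-sweep collector: objects reachable from a set of roots (think local variables and static fields) are marked as live, and everything else is reclaimed. This is a simplified Python illustration with hypothetical `ToyHeap` and `Obj` classes; the actual .NET garbage collector is generational and compacts the heap, which this sketch does not model.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(eq=False)  # eq=False keeps identity-based hashing, so objects can go in a set
class Obj:
    name: str
    refs: List["Obj"] = field(default_factory=list)  # outgoing references


class ToyHeap:
    def __init__(self) -> None:
        self.objects: List[Obj] = []  # every allocated object
        self.roots: List[Obj] = []    # stand-ins for locals and statics

    def allocate(self, name: str) -> Obj:
        obj = Obj(name)
        self.objects.append(obj)
        return obj

    def collect(self) -> List[str]:
        """Mark everything reachable from the roots, then sweep the rest.

        Returns the names of the reclaimed objects.
        """
        marked = set()
        pending = list(self.roots)
        while pending:                    # mark phase: graph traversal
            obj = pending.pop()
            if obj in marked:
                continue
            marked.add(obj)
            pending.extend(obj.refs)
        freed = [o.name for o in self.objects if o not in marked]
        self.objects = [o for o in self.objects if o in marked]  # sweep phase
        return freed


heap = ToyHeap()
a, b, c = heap.allocate("a"), heap.allocate("b"), heap.allocate("c")
d, e = heap.allocate("d"), heap.allocate("e")
a.refs.append(b)                   # a -> b, reachable through the root
d.refs.append(e)
e.refs.append(d)                   # d <-> e, a cycle nothing points to
heap.roots.append(a)
print(heap.collect())  # ['c', 'd', 'e']
```

Tracing from roots, rather than counting references, is what lets the unreachable `d`/`e` cycle be reclaimed even though each object in it is still referenced by the other.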

.NET Framework Class Library (FCL)

The .NET Framework class library is a collection of reusable types that tightly integrate with the common language runtime. It consists of namespaces, classes, interfaces, and data types included in the .NET Framework.

The .NET FCL forms the base on which applications, controls and components are built in .NET. It can be used for developing applications such as console applications, Windows GUI applications, ASP.NET applications, Windows and Web services, workflow-enabled applications, service-oriented applications using Windows Communication Foundation (WCF), XML Web services, etc.

The .NET Framework is a huge ocean. Hopefully, this article has explained some of the terms in the .NET platform and how it works.

For more details, visit this link:

https://msdn.microsoft.com/en-us/library/hh425099%28v=vs.110%29.aspx

.NET Core Is Now Open Source Software (OSS)

Satya Nadella is seriously changing the company. It doesn’t take a genius to realize that tech companies need to stop forcing decisions made in managerial meetings down their customers’ throats, and instead let things work the other way around. Collaboration is now the key to success.

Not only did Microsoft open-source .NET, they accepted the FIRST COMMUNITY PR too. This is an epic day. For more details on the open-sourcing of .NET, see:

https://github.com/dotnet/corefx/pull/31

MS committed a long time ago not to bring patent suits over the .NET specifications (the ECMA CIL specifications). This means anyone can freely implement the .NET specifications (which define the languages, platform, etc., but not all of the APIs for things like WinForms, ASP.NET, etc.); this is why Mono exists. They committed to this something like 10 years ago, and they have never violated that promise.

What happened today is that they’ve opened the implementation, not just the specification, which is awesome-sauce. But I want to emphasize that parts of the platform (the specs) have been open for a long time.

In my opinion, Microsoft’s .NET is a very mature, very complete programming world:

- Great IDE

- Great language (C#, VB.NET I guess too)

- Can create extremely robust windows applications

- Can create great web applications (using ASP.NET Web Forms if you’re old school and ASP.NET MVC if you’re into the new stuff)

- Azure support “baked in” which greatly simplifies going to the cloud

- Free version of SQL Server that is extremely powerful (SQL Server Express, includes reporting services and full text indexing) and has, again, arguably the best tooling support of any RDBMS

Historically, this has all been limited to the Windows stack (it had to run on a Windows server and be developed on a Windows computer, with expensive licenses). This move (and the previous moves leading up to it, plus the vNext work coming) is beginning to tear down this restriction.